This post has been on my back burner for well over a year. This has bothered me, because every month that goes by I become more convinced that anonymous authentication is the most important topic we could be talking about as cryptographers. This is because I’m very worried that we’re headed into a bit of a privacy dystopia, driven largely by bad legislation and the proliferation of AI.

But this is too much for a beginning. Let’s start from the basics.

One of the most important problems in computer security is user authentication. Often when you visit a website, log into a server, or access a resource, you (and generally, your computer) need to convince the provider that you’re authorized to access the resource. This authorization process can take many forms. Some sites require explicit user logins, which users complete using traditional username and password credentials, or (increasingly) advanced alternatives like MFA and passkeys. Some sites don’t require explicit user credentials, or allow you to register a pseudonymous account; however, even these sites often ask user agents to prove something. Typically this is some kind of basic “anti-bot” check, which can be done with a combination of long-lived cookies, CAPTCHAs, or whatever the heck Cloudflare does:

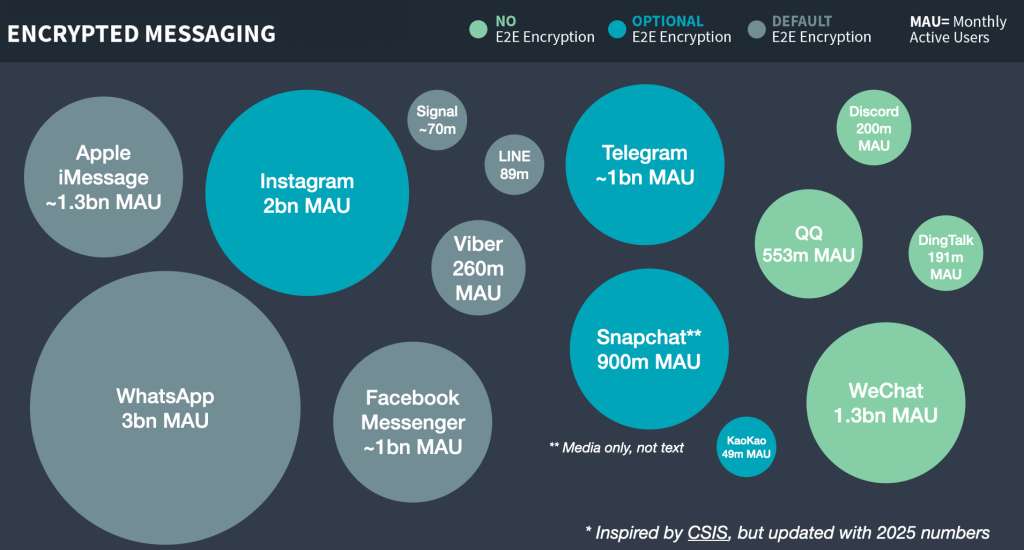

The Internet I grew up with was always pretty casual about authentication: as long as you were willing to take some basic steps to prevent abuse (make an account with a pseudonym, or just refrain from spamming), many sites seemed happy to allow somewhat-anonymous usage. Over the past couple of years this pattern has changed. In part this is because sites like to collect data, and knowing your identity makes you more lucrative as an advertising target. However, a more recent driver of this change is the push for legal age verification. Newly minted laws in 25 U.S. states and at least a dozen countries demand that site operators verify the age of their users before displaying “inappropriate” content. Most of these laws were designed to tackle pornography, but (as many civil liberties folks warned) adult and adult-adjacent content is on almost any user-driven site. This means that age-verification checks are now popping up on social media websites like Facebook, BlueSky, X and Discord. Even encyclopedias aren’t safe: for example, Wikipedia is slowly losing its fight against the U.K.’s Online Safety Bill.

Whatever you think about age verification as a requirement, it’s apparent that routine ID checks will create a huge new privacy concern across the Internet. Increasingly, users of most sites will need to identify themselves, not by pseudonym but by actual government ID, just to use any basic site that might have user-generated content. If this is done poorly, this reveals a transcript of everything you do, all neatly tied to a real-world verifiable ID. While a few nations’ age-verification laws allow privacy-conscious sites to voluntarily discard the information once they’ve processed it, this has been far from uniform. Even if data minimization is allowed, advertising-supported sites will have an enormous financial incentive to retain real-world identity information, since the value of precise human identity is huge, and will only increase as non-monetizable AI-bots eat a larger share of these platforms.

The problem for today is: how do we live in a world with routine age-verification and human identification, without completely abandoning our privacy?

Anonymous credentials: authentication without identification

Back in the 1980s, a cryptographer named David Chaum caught a glimpse of our soon-to-be future, and he didn’t much like it. Long before the web or smartphones existed, Chaum recognized that users would need to routinely present (electronic) credentials to live their daily lives. He also saw that this would have enormous negative privacy implications. To address life in that world, he proposed a new idea: the anonymous credential.

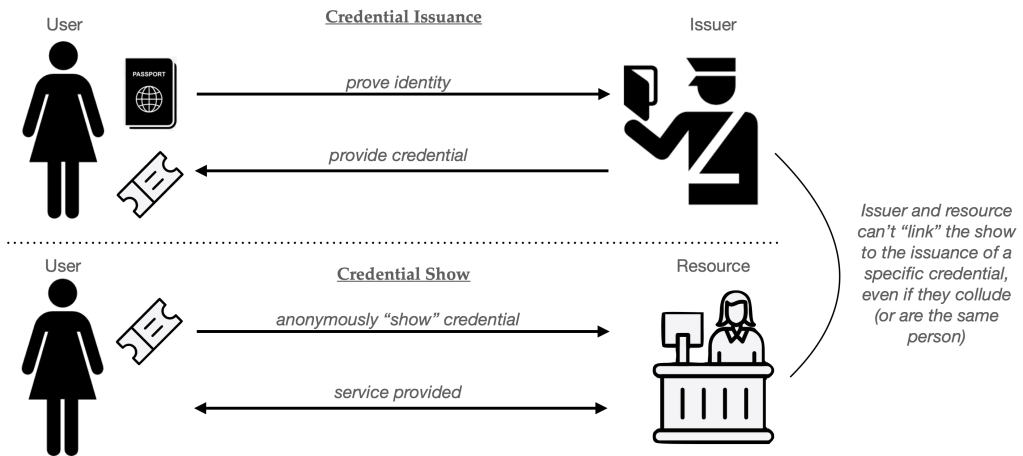

Let’s imagine a world where Alice needs to access some website or “Resource”. In a standard non-anonymous authentication flow, Alice needs to be granted authorization (a “credential”, such as a cookie) to do this. This grant can come either from the Resource itself (e.g., the website), or in other cases, from a third party (for example, Google’s SSO service.) For the moment we should assume that the preconditions for issuance are not private: that is, Alice will presumably need to reveal something about her identity to the person who issues the credential. For example, she might use her credit card to pay for a subscription (e.g., for a news website), or she might hand over her driver’s license to prove that she’s an adult.

From a privacy perspective, the problem is that Alice will need to present her credential every time she wants to access that Resource. For example, each time she visits Wikipedia, she’ll need to hand over a credential that is tied to her real-world identity. A curious website (or an advertising network) can use this to precisely link her browsing history on the site to an actual human in the world. To a certain extent, this is the world we already live in today: advertising companies probably know a lot about who we are and what we’re browsing. What’s about to change in our future is that these online identities will increasingly be bound to our real-world government identity, so no more “Anonymous-User-38.”

Chaum’s idea was to break the linkage between the issuance and usage of a credential. This means that when Alice shows her credential to the website, all the site learns is that Alice has been given a valid credential. The site should not learn which issuance flow produced her credential, which means it should not learn her exact ID; and this should hold even if the website colludes with (or literally is) the issuer of the credentials. The result is that, to the website, at least, Alice’s browsing can be unlinked from her identity. In other words, she can “hide” within the anonymity set of all users who obtained credentials.

One analogy I’ve seen for simple anonymous credentials is to think of them like a digital version of a “wristband”, the kind you might receive at the door of a club. In that situation, you show your ID to the person at the door, who then gives you an unlabeled wristband that indicates “this person is old enough to buy alcohol” or something along these lines. Although the doorperson sees your full ID, the bartender knows you only as the owner of a wristband. In principle your bar order (and your love of spam-based drinks) is untied somewhat from your name and address.

Why don’t we just give every user a copy of the same credential?

Before we get into the weeds of building anonymous credentials, it’s worth considering the obvious solution. What we want is simple: every user’s credential should be indistinguishable when “shown” to the resource. The obvious question is: why doesn’t the issuer give a copy of the exact same credential to each user? In principle this solves all of the privacy problems, since every user’s “show” will literally be identical. (In fact, this is more or less the digital analog of the physical wristband approach.)

The problem here is that digital items are fundamentally different from physical ones. Real-world items like physical credentials (even cheap wristbands) are at least somewhat difficult to copy. A digital credential, on the other hand, can be duplicated effortlessly. Imagine a hacker breaks into your computer and steals a single credential: they can now make an unlimited number of copies and use them to power a basically infinite army of bot accounts, or sell them to minors, all of whom will appear to have valid credentials.

It’s worth pointing out that this exact same thing can happen with non-anonymous credentials (like usernames/passwords or session cookies) as well. However, there’s a difference. In the non-anonymous setting, credential cloning and other similar abuse can be detected, at least in principle. Websites routinely monitor for patterns that indicate the use of stolen credentials: for example, many will flag when they see a single “user” showing up too frequently, or from different and unlikely parts of the world, a procedure that’s sometimes called continuous authentication. Unfortunately, the anonymity properties of anonymous credentials render such checks mostly useless, since every credential “show” is totally anonymous, and we have no idea which user is actually presenting.

To address these threats, any real-world useful anonymous credential system has to have some mechanism to limit credential duplication. The most basic approach is to provide users with credentials that are limited in some fashion. There are a few different approaches to this:

- Single-use (or limited-usage) credentials. The most common approach is to issue credentials that allow the user to log in (“show” the credential) exactly one time. If a user wants to access the website fifty times, then she needs to obtain fifty separate credentials from the Issuer. A hacker can still steal these credentials, but they’ll also be limited to only a bounded number of website accesses. This approach is used by credentials like PrivacyPass, which is used by sites like Cloudflare.

- Revocable credentials. Another approach is to build credentials that can be revoked in the event of bad behavior. This requires a procedure such that when a particular anonymous user does something bad (posts spam, runs a DOS attack against a website) you can revoke that specific user’s credential — blocking future usage of it, without otherwise learning who they are.

- Hardware-tied credentials. Some real-world proposals like Google’s approach instead “bind” credentials to a piece of hardware, such as the trusted platform module in your phone. This makes credential theft harder — a hacker will need to “crack” the hardware to clone the credentials. But a successful theft still has big consequences that can undermine the security of the whole system.

The anonymous credential literature is filled with variants of the above approaches, sometimes combinations of the three. In every case, the goal is to put some barriers in the way of credential cloning.

Building a single-use credential

With these warnings in mind, we’re now ready to talk about how anonymous credentials are actually constructed. We’re going to discuss two different paradigms, which sometimes mix together to produce more interesting combinations.

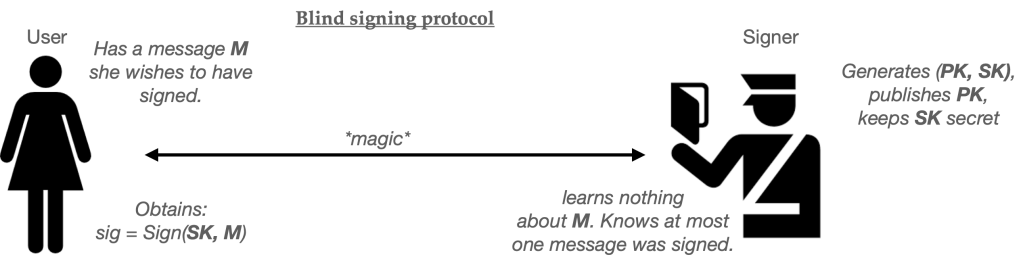

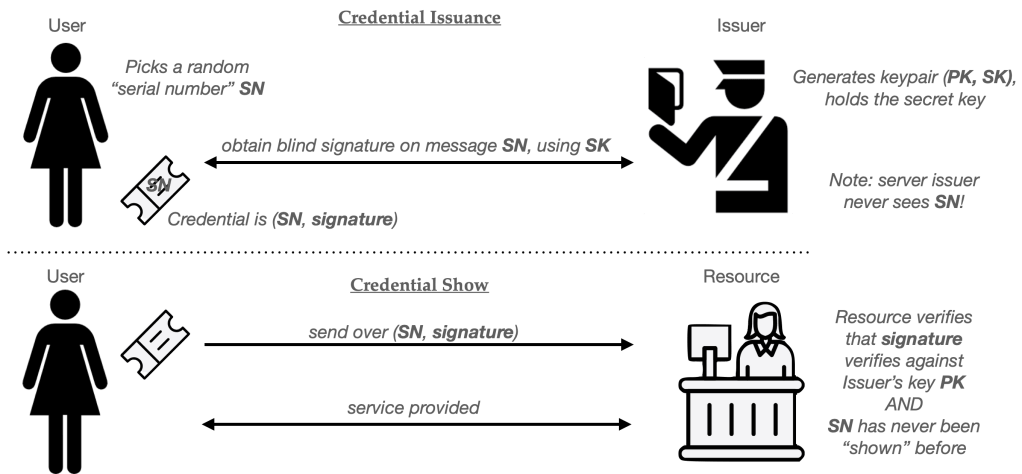

Chaum’s original constructions produce single-use credentials, based on a primitive known as a blind signature scheme. Blind signatures are a variant of digital signatures; they feature an additional protocol that allows for “blind signing”. In this flow, a User has a message they want to have signed, while the Server holds the secret half of a public/secret signing keypair. The two parties run an interactive protocol, at the end of which the User obtains a signature on their message. The server knows that it signed (exactly one) message, but critically, the server does not learn the value of the message that it signed.

For the purposes of this post, we won’t spend too much time thinking about how blind signatures are constructed (though some more details are here, if you’re interested.) For now, let’s assume we’ve been handed a working blind signature scheme. Using this scheme as our base ingredient, we can build a basic single-use anonymous credential as follows:

- First, the Issuer generates a signing keypair (PK, SK) and gives out the key PK to everyone who might wish to verify its signatures.

- When the User wishes to obtain a credential, she randomly selects a new serial number SN. This random string should be long enough that it’s highly unlikely to repeat, assuming it’s chosen honestly.

- The User and Issuer now run the blind signing protocol described above. In this case, the User sets its message to SN and the Issuer employs its signing key SK. At the completion of this protocol, the user should hold a valid signature under the Issuer’s key computed over the message “SN”. The pair (SN, signature) form the User’s credential.

To “show” the credential to some Resource, the User simply needs to hand over the pair (SN, signature). Assuming the Resource knows the public key (PK) of the issuer, it can verify that (1) the signature is valid on SN, and (2) nobody has ever used that value SN in some previous credential “show”.

If there is exactly one Resource (website) consuming these credentials, then serial number checking can be conducted locally, using a simple database of past SN values seen. This gets a bit messier if there are many Resources (say different websites) that credentials can be used at. A typical solution is to outsource serial number checks to some centralized service (or “bulletin board”) which helps to prevent a user from re-using a single credential across many different sites.
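As a rough illustration of the issue-and-show flow above, here is a minimal Python sketch that uses textbook blind RSA as the blind signature. Everything specific in it is an assumption made for the example rather than something taken from a real deployment: the tiny key built from two Mersenne primes, the unpadded hash-then-sign step, and the helper names (`user_blind`, `issuer_sign_blinded`, `user_unblind`, `Resource`). It is insecure as written and is only meant to show where SN, the blinding factor and the serial-number database fit.

```python
import hashlib
import math
import secrets

# Toy Issuer keypair. Both factors are Mersenne primes; the modulus is far
# too small for real use and is an assumption made purely for illustration.
P, Q = 2**89 - 1, 2**107 - 1
N, E = P * Q, 65537                    # public key PK = (N, E)
D = pow(E, -1, (P - 1) * (Q - 1))      # secret key SK (needs Python 3.8+)


def hash_to_int(sn: bytes) -> int:
    """Map a serial number to an integer mod N (no padding: toy only)."""
    return int.from_bytes(hashlib.sha256(sn).digest(), "big") % N


def user_blind(sn: bytes):
    """User hides H(SN) behind a random factor r before sending it."""
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:
            return (hash_to_int(sn) * pow(r, E, N)) % N, r


def issuer_sign_blinded(blinded: int) -> int:
    """Issuer signs the blinded value without learning SN."""
    return pow(blinded, D, N)


def user_unblind(blind_sig: int, r: int) -> int:
    """User strips r, leaving an ordinary signature on H(SN)."""
    return (blind_sig * pow(r, -1, N)) % N


def verify(sn: bytes, sig: int) -> bool:
    return pow(sig, E, N) == hash_to_int(sn)


class Resource:
    """Accepts each valid (SN, signature) pair at most once."""

    def __init__(self):
        self.seen = set()

    def show(self, sn: bytes, sig: int) -> bool:
        if sn in self.seen or not verify(sn, sig):
            return False
        self.seen.add(sn)
        return True


# Issuance: the User picks SN and obtains a signature on it blindly.
sn = secrets.token_bytes(16)
blinded, r = user_blind(sn)
signature = user_unblind(issuer_sign_blinded(blinded), r)

# Show: the first use is accepted, a replay of the same SN is not.
resource = Resource()
first_show = resource.show(sn, signature)
second_show = resource.show(sn, signature)
```

With many Resources, the `seen` set in this sketch would be replaced by a lookup against the shared service described above.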

Here’s the whole protocol in helpful pictograms:

Chaumian credentials are now about forty years old and still work well, provided your Issuer is willing to bear the cost of running the blind signature protocol for each credential it issues, and that the Resource doesn’t mind verifying a signature for each “show”. Protocols like PrivacyPass implement this using schemes like blind RSA signatures, so presumably the cost of these operations isn’t prohibitive for real-world applications. However, PrivacyPass also includes some speed optimizations for cases where the Issuer and Resource are the same entity, and these make a big difference.1

Single-use credentials work great, but have some drawbacks. The big ones are (1) efficiency, and (2) lack of expressiveness.

The efficiency problem becomes obvious when you consider a user who accesses a website many times. For example, imagine using an anonymous credential to replace Google’s session cookies. For most users, this would require obtaining and delivering thousands of single-use credentials every single day. You might mitigate this problem by using credentials only for the first registration to a website, after which you can trade your credential for a pseudonym (such as a random username or a normal session cookie) for later accesses. But the downside of this is that all of your subsequent site accesses would be linkable, which is a bit of a privacy tradeoff.

The expressiveness objection is a bit more complicated. Let’s talk about that next.

Let’s be expressive!

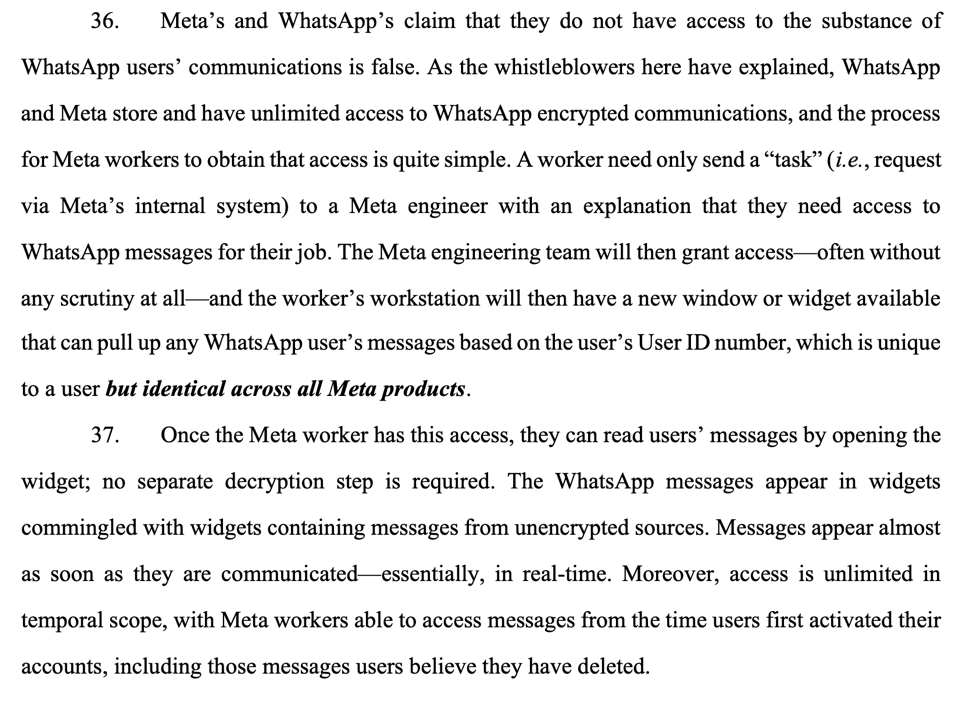

Simple Chaumian credentials have a more fundamental limitation: they don’t carry much information.

Consider our bartender in a hypothetical wristband-issuing club. When I show up at the door, I provide my ID and get a wristband that shows I’m over 21. The wristband “credential” carries “one bit” of information: namely, the fact that I’m older than some arbitrary age constant.

Sometimes we want to prove more interesting things with a digital credential. For example, imagine that I want to join a cryptocurrency exchange that needs more complicated assurances about my identity. It might require that I’m a US resident, but not a resident of New York State (which has its own regulations.) The site might also demand that I’m over the age of 25. (I am literally making these requirements up as I go.) I could satisfy the website on all these fronts using the digitally-signed driver’s license issued by my state’s DMV. This is a real thing! It consists of a signed and structured document full of all sorts of useful information: my home address, state of issue, eye color, birthplace, height, weight, hair color and gender. In this world, the non-anonymous solution is easy: I just hand over my digitally-signed license and the website verifies the properties it needs in the various fields.

The downside to handing over my driver’s license is that doing so means I also leak much more information than the site requires. For example, this creepy website will also learn my home address, which it might use to send me junk mail! I’d really prefer it didn’t. A much better solution would allow me to assure the website only about the specific facts it cares about. I could remain anonymous otherwise.

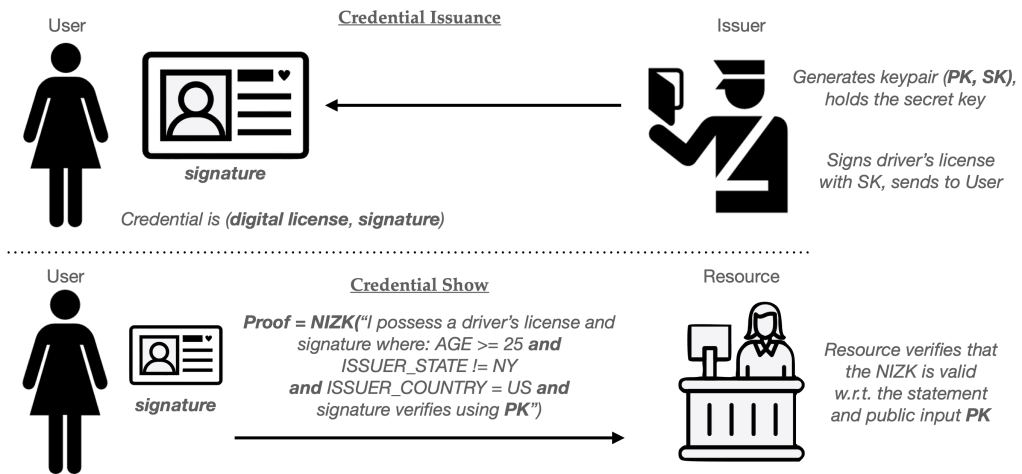

For example, all I really want to prove can be summarized in the following four bullet points:

- BIRTHDATE <= (TODAY – 25 years)

- ISSUE_STATE != NY

- ISSUE_COUNTRY = US

- SIGNATURE = (some valid signature that verifies under a known state DMV public key).
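Written as ordinary (non-private) code, the field checks in this list are just a predicate over the license. The sketch below is only that plain predicate: the `License` class, its field names and `satisfies_policy` are hypothetical, and the signature check from the last bullet is deliberately left out.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class License:
    """Hypothetical subset of the fields in a digitally-signed license."""
    birthdate: date
    issue_state: str
    issue_country: str


def age_in_years(birthdate: date, today: date) -> int:
    """Whole years elapsed between birthdate and today."""
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    return today.year - birthdate.year - (0 if had_birthday else 1)


def satisfies_policy(lic: License, today: date) -> bool:
    """The three field checks from the list above (signature check omitted)."""
    return (
        age_in_years(lic.birthdate, today) >= 25   # BIRTHDATE <= TODAY - 25 years
        and lic.issue_state != "NY"                # ISSUE_STATE != NY
        and lic.issue_country == "US"              # ISSUE_COUNTRY = US
    )


today = date(2026, 12, 4)
alice = License(date(1990, 5, 1), "MD", "US")
result = satisfies_policy(alice, today)
```

In the non-anonymous flow the website would run a check like this itself, which is exactly why it gets to see every field.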

I could outsource these checks to some Issuer, and have them issue me a single-use credential that claims to verify all these facts. But this is annoying, especially if I already have the signed license.

A different way to accomplish this is to use zero-knowledge (ZK) proofs. A ZK proof allows me to prove that I know some secret value that satisfies various constraints. For example, I could use a ZK proof to “prove” to some Resource that I have a signed, structured driver’s license credential. I could further use the proof to demonstrate that the value in each of the fields referenced above satisfies the constraints listed above. The neat thing about using a ZK proof to make this claim is that my “proof” should be entirely convincing to the website, yet will reveal nothing at all beyond the fact that these claims are true.

A variant of the ZK proof, called the non-interactive zero-knowledge proof (NIZK), lets me do this in a single message from User to Resource. Using this tool, I can build a credential system as follows:

(These techniques are very powerful. Not only can I change the constraints I’m proving on demand, but I can also perform proofs that reference multiple different credentials at the same time. For example, I might prove that I have a driver’s license, and also that my digitally-signed credit report indicates that I have a credit rating over 700.)

The ZK-proof approach also addresses the efficiency limitation of the basic single-use credential: here the same credential can be re-used to power many “show” protocol runs, without allowing websites to link any credential show to the others. This property stems from the fact that ZK proofs are normally randomized, and each “proof” should reveal no other information about the user, not even the fact that it is the same user.2
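To make the idea of a randomized, non-interactive proof a little more concrete, here is a minimal Schnorr-style proof of knowledge, made non-interactive with the Fiat-Shamir transform. This is a stand-in and not the proof system a real credential scheme would use: it proves only that the prover knows a secret x behind a public value y = g^x, and says nothing about a signed license. The tiny group (p = 2039, q = 1019, g = 4) and the function names are assumptions chosen for readability and offer no real security.

```python
import hashlib
import secrets

# Toy group: P = 2*Q + 1 with both prime, and G generates the subgroup of
# order Q. These parameters are illustrative assumptions and are insecure.
P, Q, G = 2039, 1019, 4


def challenge(y: int, t: int, context: bytes) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the transcript."""
    data = f"{P}|{Q}|{G}|{y}|{t}|".encode() + context
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x: int, context: bytes):
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    y = pow(G, x, P)
    r = secrets.randbelow(Q)          # fresh randomness for every proof
    t = pow(G, r, P)                  # commitment
    c = challenge(y, t, context)
    s = (r + c * x) % Q               # response
    return t, s


def verify(y: int, proof, context: bytes) -> bool:
    """Check that G^s equals t * y^c for the recomputed challenge c."""
    t, s = proof
    c = challenge(y, t, context)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


secret_x = secrets.randbelow(Q - 1) + 1
public_y = pow(G, secret_x, P)

# Two proofs about the same secret, each built from independent randomness.
proof_a = prove(secret_x, b"show #1")
proof_b = prove(secret_x, b"show #2")
ok_a = verify(public_y, proof_a, b"show #1")
ok_b = verify(public_y, proof_b, b"show #2")
```

Unlike a real credential show, the public value y is visible to the verifier here, so this sketch illustrates only the randomized proof itself and not unlinkability.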

Of course, there are downsides to this re-usability as well, as we’ll discuss in the next section.

How to win the clone wars

We’ve argued that the zero-knowledge paradigm has two advantages over simple Chaumian credentials. First, it’s potentially much more expressive. Second, it allows a User to re-use a single credential many times without needing to constantly retrieve new single-use credentials from the Issuer. While that’s very convenient, it raises a concern we already discussed: what happens if a hacker steals one of these re-usable credentials?

This is catastrophic for anonymous credential systems, since a single stolen credential anywhere means that the guarantees of the global system become useless. Even worse, the “hacker” in this case might be one of your legitimate users!

As mentioned earlier, one approach to solving this problem is to simply make credential theft very, very hard. This is the optimistic approach proposed in Google’s new anonymous credential scheme. Here, credentials will be tied to a key stored within the “secure element” in your phone, which theoretically makes them hard to duplicate onto a different phone. The problem here is that there are hundreds of millions of phones in the world, and the Secure Element technology in them runs the gamut from “very good” (for high-end, flagship phones) to “modestly garbage” (for the cheap burner Android phone you can buy at Target.) Unfortunately, anonymous credential systems can be fragile — meaning that a failure in any of those phones potentially compromises the integrity of the whole system.

An alternative approach is to limit the usefulness of any given credential, much as we did with our single-use credentials above. Once you have ZK proofs in place, there are many ways to do this.

One clever approach is to place an upper bound on the number of times that a ZK credential can be used. For example, we might wish to ensure that a credential can be “shown” at most N times before it expires. This is analogous to extracting many different single-use credentials, without the hassle of having to make the Issuer do quite as much work.

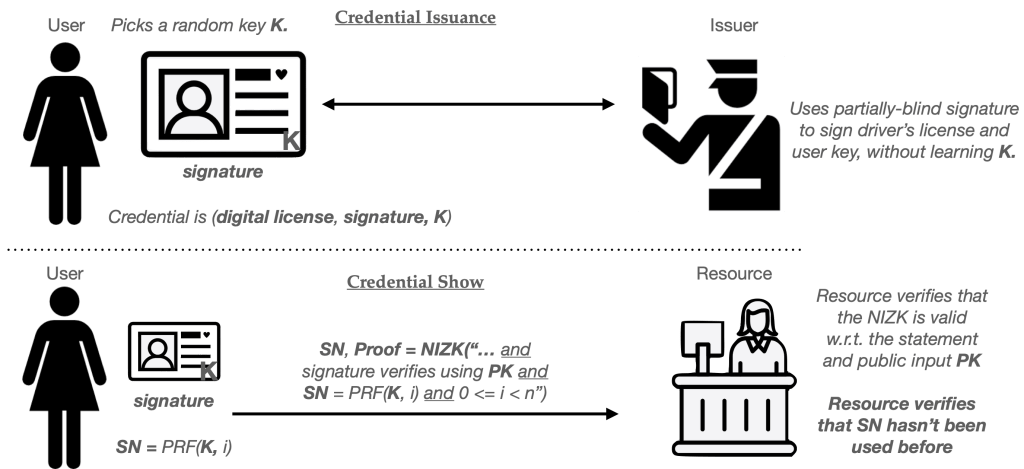

We can modify our ZK credential to support a limit of N shows as follows. First, let’s have the User select a random key K for a pseudorandom function (PRF). This function is somewhat like a good hash function: it’s a deterministic function that takes in a key and an arbitrary “message”, then blends them to produce a random-looking output that should be unlikely to repeat, provided the same message is not re-evaluated. We’ll embed the key K into the credential and have the issuer sign it. (It’s important that the Issuer does not learn K, so this often requires that the credential be signed using a blind, or partially-blind, signing protocol.3) We’ll now use this key and PRF to generate unique serial numbers, one for each time we “show” the credential.

Concretely, the ith time we “Show” the credential, we’ll generate the following “serial number”:

SN = PRF(K, i)

Once the User has computed SN for a particular show, it will send this serial number to the Resource along with the zero-knowledge proof. The ZK proof will, in turn, be modified to include two additional clauses:

- A proof that SN = PRF(K, i), for some value i, and the value K that’s stored within the signed credential.

- A proof that 0 <= i < N.

Notice that these “serial numbers” are very similar to the ones we embedded in the single-use credentials above. Each Resource (website) can keep a list of each SN value that it sees, and sites can reject any “show” that repeats a serial number. As long as the User never repeats a counter (and the PRF output is long enough), serial numbers should be unlikely to repeat. However, repetition becomes inevitable if the User ever “cheats” and tries to show the same credential N+1 times.
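The counting mechanism can be sketched in a few lines of Python, using HMAC-SHA256 as a stand-in PRF. The zero-knowledge proof is not implemented here: the range check inside `serial_number` merely marks where the clause 0 <= i < N would be enforced by the proof, and the names `serial_number` and `Resource` are hypothetical.

```python
import hashlib
import hmac
import secrets

N_SHOWS = 3  # N: maximum number of shows (an arbitrary value for this example)


def prf(key: bytes, message: bytes) -> bytes:
    """HMAC-SHA256, used here as a stand-in pseudorandom function."""
    return hmac.new(key, message, hashlib.sha256).digest()


def serial_number(key: bytes, i: int) -> bytes:
    """SN = PRF(K, i). The range check stands in for the ZK clause 0 <= i < N."""
    if not 0 <= i < N_SHOWS:
        raise ValueError("counter out of range: no valid proof exists")
    return prf(key, i.to_bytes(8, "big"))


class Resource:
    """Rejects any show whose serial number has been seen before."""

    def __init__(self):
        self.seen = set()

    def accept(self, sn: bytes) -> bool:
        if sn in self.seen:
            return False
        self.seen.add(sn)
        return True


key = secrets.token_bytes(32)          # K, known only to the User
site = Resource()

# N honest shows use counters 0..N-1 and are all accepted.
honest = [site.accept(serial_number(key, i)) for i in range(N_SHOWS)]

# An (N+1)th show must reuse a counter, so its serial number repeats.
cheating = site.accept(serial_number(key, 0))
```

The Resource never learns K or i in this flow; it sees only the serial numbers, which look random and unrelated to each other.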

This approach can be constructed in many variants. For example, with some simple tweaks, we can build credentials that only permit the User to employ the credential a limited number of times in any given time period: for example, at most 100 times per day.4 This requires us to simply change the inputs to the PRF, so that they include a time period (for example, the date) as well as a counter. These techniques are described in a great paper whose title I’ve stolen for this section.
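Here is a hedged sketch of the per-period variant, where the only change is that the PRF input carries the time period as well as the counter. HMAC-SHA256 again stands in for the PRF, and the input encoding (ISO date, a separator byte, then an 8-byte counter) is an assumption made for this example.

```python
import hashlib
import hmac
from datetime import date

DAILY_LIMIT = 100  # at most 100 shows per day, as in the example above


def serial_number(key: bytes, day: date, i: int) -> bytes:
    """SN = PRF(K, date || i), valid only for counters 0 <= i < DAILY_LIMIT."""
    if not 0 <= i < DAILY_LIMIT:
        raise ValueError("counter out of range for this period")
    message = day.isoformat().encode() + b"|" + i.to_bytes(8, "big")
    return hmac.new(key, message, hashlib.sha256).digest()


key = b"\x01" * 32  # fixed demo key; a real K would be random and secret
today, tomorrow = date(2026, 12, 4), date(2026, 12, 5)

sn_today = serial_number(key, today, 0)
sn_repeat = serial_number(key, today, 0)      # same day, same counter
sn_tomorrow = serial_number(key, tomorrow, 0)  # new day, counter starts over
```

Reusing a counter within one day reproduces the same serial number, while the same counter on the next day yields a fresh one, so the budget resets with each period.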

Expiring and revoking credentials

The power of the ZK approach gives us many other tools to limit the power of credentials. For example, it’s relatively easy to add expiration dates to credentials, which will implicitly limit their useful lifespan — and hopefully reduce the probability that one gets stolen. To do this, we simply add a new field (e.g., Expiration_Time) that specifies a timestamp at which the credential should expire.

When a user “shows” the credential, they can first check their clock for the current time T, and they can add the following clause to their ZK proof:

T < Expiration_Time

Revoking credentials is a bit more complicated.

One of the most important countermeasures against credential abuse is the ability to ban users who behave badly. This sort of revocation happens all the time on real sites: for example, when a user posts spam on a website, or abuses the site’s terms of service. Yet implementing revocation with anonymous credentials seems inherently difficult. In a non-anonymous credential system we simply identify the user and add them to a banlist. But anonymous credential users are anonymous! How do you ban a user who doesn’t have to identify themselves?

That doesn’t mean that revocation is impossible. In fact, there are several clever tricks for banning credentials in the zero-knowledge credential setting.

Imagine we’re using a basic signed credential like the one we’ve previously discussed. As in the constructions above, we’re going to ensure that the User picks a secret key K to embed within the signed credential.5 As before, the key K will power a pseudorandom function (PRF) that can make pseudorandom “serial numbers” based on some input.

For the moment, let’s assume that the site’s “banlist” is empty. When a user goes to authenticate itself, the User and website interact as follows:

- First, the website will generate a unique/random “basename” bsn that it sends to the User. This is different for every credential show, meaning that no two interactions should ever repeat a basename.

- The user next computes SN = PRF(K, bsn) and sends SN to the Resource, along with a zero-knowledge proof that SN was computed correctly.

If the user does nothing harmful, the website delivers the requested service and nothing further happens. However, if the User abuses the site, the Resource will now ban the user by adding the pair (bsn, SN) to the banlist.

Now that the banlist is non-empty, we require that an additional step occur every time a user shows their credential: specifically, the User must prove to the website that they aren’t on the list. Doing this requires the User to enumerate every pair (bsn_i, SN_i) on the banlist, and prove that for each one, the following statement is true:

SN_i ≠ PRF(K, bsn_i), using the User’s key K.

Naturally this approach requires a bit more work on the User’s part: if there are M users on the banned list, then every User must do about M extra pieces of work when Showing their credential, so the scheme works best when the number of banned users stays small.
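The bookkeeping behind this banlist can be sketched as follows, again with HMAC-SHA256 standing in for the PRF. This sketch evaluates the non-membership check in the clear using K, which a real system would never do: there, the User would prove each inequality in zero knowledge. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def prf(key: bytes, message: bytes) -> bytes:
    """HMAC-SHA256, used here as a stand-in pseudorandom function."""
    return hmac.new(key, message, hashlib.sha256).digest()


def show(key: bytes, bsn: bytes) -> bytes:
    """User's response to a fresh basename: SN = PRF(K, bsn)."""
    return prf(key, bsn)


def not_banned(key: bytes, banlist) -> bool:
    """The statement the User must prove: SN_i != PRF(K, bsn_i) for every entry.

    Costs one PRF evaluation per banlist entry, so M entries mean M checks.
    """
    return all(
        not hmac.compare_digest(sn_i, prf(key, bsn_i)) for bsn_i, sn_i in banlist
    )


alice_key = secrets.token_bytes(32)
mallory_key = secrets.token_bytes(32)
banlist = []

# Mallory shows her credential, then misbehaves; the site bans that show.
bsn = secrets.token_bytes(16)          # fresh basename chosen by the website
banlist.append((bsn, show(mallory_key, bsn)))

alice_ok = not_banned(alice_key, banlist)
mallory_ok = not_banned(mallory_key, banlist)
```

The site never learns which key belongs to whom. It only records the (bsn, SN) pair from the offending session, and only the holder of the matching key fails the check.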

Up next: what do real-world credential systems look like?

So far we’ve just dipped our toes into the techniques that we can use for building anonymous credentials. This tour has been extremely shallow: we haven’t talked about how to build any of the pieces we need to make them work. We also haven’t addressed tough real-world questions like: where are these digital identity certificates coming from, and what do we actually use them for?

In the next part of the piece I’m going to try to make this all much more concrete, by looking at two real-world examples: PrivacyPass, and a brand-new proposal from Google to tie anonymous credentials to your driver’s license on Android phones.

(To be continued)

Headline image: Islington Education Library Service

Notes:

- PrivacyPass has two separate issuance protocols. One uses blind RSA signatures, which are more or less an exact mapping to the protocol we described above. The second one replaces the signature with a special kind of MAC scheme, which is built from an elliptic-curve OPRF scheme. MACs work very similarly to signatures, but require the secret key for verification. Hence, this version of PrivacyPass really only works in cases where the Resource and the Issuer are the same person, or where the Resource is willing to outsource verification of credentials to the Issuer.

- This is a normal property of zero-knowledge proofs, namely that any given “proof” should reveal nothing about the information proven on. In most settings this extends even to preventing anyone from linking proofs to the specific piece of secret input you’re proving over, which is called a witness.

- A blind signature ensures that the server never learns which message it’s signing. A partially-blind signature protocol allows the server to see a part of the message, but hides another part. For example, a partially-blind signature protocol might allow the server to see the driver’s license data that it’s signing, but not learn the value K that’s being embedded within a specific part of the credential. A second way to accomplish this is for the User to simply commit to K (e.g., compute a hash of K), and store this value within the credential. The ZK statement would then be modified to prove: “I know some value K that opens the commitment stored in my credential.” This is pretty deep in the weeds.

- In more detail, imagine that the User and Resource both know that the date is “December 4, 2026”. Then we can compute the serial number as follows:

SN = PRF(K, date || i)

As long as we keep the restriction that 0 <= i < N (and we update the other ZK clauses appropriately, so they ensure the right date is included in this input), this approach allows us to use N different counter values (i) within each day. Once both parties increment the date value, we should get an entirely new set of N counter values. Days can be swapped for hours, or even shorter periods, provided that both parties have good clocks.

- In real systems we do need to be a bit careful to ensure that the key K is chosen honestly and at random, to avoid a user duplicating another user’s key or doing something tricky. Often real-world issuance protocols will have K chosen jointly by the Issuer and User, but this is a bit too technically deep for a blog post.