It’s not every day that I wake up thinking about how people back up their web browsers. Mostly this is because I don’t feel the need to back up any aspect of my browsing. Some people lovingly maintain huge libraries of bookmarks and use fancy online services to organize them. I pay for one of those because I aspire to be that kind of person, but I’ve never been organized enough to use it.

In fact, the only thing I want from my browser is for my history to please go away, preferably as quickly as possible. My browser is a part of my brain, and backing my thoughts up to a cloud provider is the most invasive thing I can imagine. Plus, I’m constantly imagining how I’ll explain specific searches to the FBI.

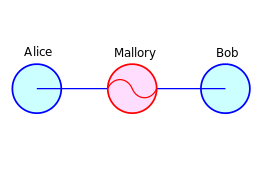

All of these thoughts are apropos of a Twitter thread I saw last night from Justin Schuh, the Engineering Director on Chrome Security & Privacy at Google, which explains why “browser sync” features (across several platforms) can’t provide end-to-end encryption by default.

This thread sent me down a rabbit hole that ended in a series of highly-scientific Twitter polls and frantic scouring of various providers’ documentation. Because while on the one hand Justin’s statement is mostly true, it’s also a bit wrong. Specifically, I learned that Apple really seems to have solved this problem. More interestingly, the specific way that Apple has addressed this problem highlights some strange assumptions that make this whole area unnecessarily messy.

This munging of expectations also helps to explain why “browser sync” features and the related security tradeoffs seem so alien and horrible to me, while other folks think these are an absolute necessity for survival.

Let’s start with the basics.

What is cloud-based browser “sync”, and how secure is it?

Most web browsers (and operating systems with a built-in browser) incorporate some means of “synchronizing” browsing history and bookmarks. By starting with this terminology we’ve already put ourselves on the back foot, since “synchronize” munges together three slightly different concepts:

- Synchronizing content across devices. Where, for example, you have a phone, a laptop and a tablet all active and in occasional use, and want your data to propagate from one to the others.

- Backing up your content. Wherein you lose all your device(s) and need to recover this data onto a fresh clean device.

- Logging into random computers. If you switch computers regularly (for example, back when we worked in offices) then you might want to be able to quickly download your data from the cloud.

(Note that the third case is kind of weird. It might be a subcase of the first if you have another device that’s active and can send you the data. It might be a subcase of the second. I hate this one and am sending it to live on a farm upstate.)

You might ask why I call these concepts “different” at all when they seem quite similar. The answer is that I’m thinking about a very specific question: namely, how hard is it to end-to-end encrypt this data so that the cloud provider can’t read it? The answer is different between (at least) the first two cases.

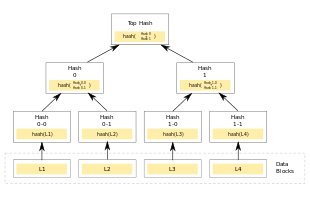

If what we really want to do is synchronize your data across many active devices, then the crypto problem is relatively easy. The devices generate public keys and register them with your cloud provider, and then each one simply encrypts relevant content to the others. Apple has (I believe) begun to implement this across their device ecosystem.

If what we want is cloud backup, however, then the problem is much more challenging. Since the base assumption is that the device(s) might get lost, we can’t store decryption keys there. We could encrypt the data under the user’s device passcode or something, but most users choose terrible passcodes that are trivially subject to dictionary attacks. Services like Apple iCloud and Google (Android) have begun to deploy trusted hardware in their data centers to mitigate this: these “Hardware Security Modules” (HSMs) store encryption keys for each user, and only allow a limited number of password guesses before they wipe the keys forever. This keeps providers and hackers out of your stuff. Yay!
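To make the guess-limiting idea concrete, here is a minimal sketch of the behavior described above. It is a toy model, not Apple's or Google's actual design: the `ToyHSM` class, the `dictionary_attack` helper, the attempt limit and the PBKDF2 iteration count are all assumptions made up for illustration. If the backup key were simply derived from the passcode, an attacker could run through a dictionary offline with no limit. Here the key lives inside the "module" and is erased after a few wrong guesses.

```python
import hashlib
import hmac
import secrets


class ToyHSM:
    """Toy guess-limited key vault (hypothetical; not any vendor's real design)."""

    def __init__(self, max_attempts: int = 10):
        self.max_attempts = max_attempts
        self._records = {}  # user name -> stored record

    def enroll(self, user: str, passcode: str) -> bytes:
        """Create a random backup key guarded by a salted passcode hash."""
        salt = secrets.token_bytes(16)
        backup_key = secrets.token_bytes(32)
        self._records[user] = {
            "salt": salt,
            "verifier": self._hash(passcode, salt),
            "key": backup_key,
            "failures": 0,
        }
        return backup_key

    def recover(self, user: str, passcode: str) -> bytes:
        """Release the key on a correct passcode; wipe it after too many misses."""
        record = self._records.get(user)
        if record is None:
            raise KeyError("no key stored (never enrolled, or already wiped)")
        candidate = self._hash(passcode, record["salt"])
        if hmac.compare_digest(candidate, record["verifier"]):
            record["failures"] = 0
            return record["key"]
        record["failures"] += 1
        if record["failures"] >= self.max_attempts:
            del self._records[user]  # the key is gone for good
        raise PermissionError("wrong passcode")

    @staticmethod
    def _hash(passcode: str, salt: bytes) -> bytes:
        # Iteration count is an illustrative assumption, not a recommendation.
        return hashlib.pbkdf2_hmac("sha256", passcode.encode(), salt, 10_000)


def dictionary_attack(hsm: ToyHSM, user: str, guesses):
    """Try guesses in order; return the key, or None once the vault has wiped it."""
    for guess in guesses:
        try:
            return hsm.recover(user, guess)
        except PermissionError:
            continue  # wrong guess, try the next one
        except KeyError:
            return None  # key was wiped; nothing left to attack
    return None


if __name__ == "__main__":
    hsm = ToyHSM(max_attempts=3)
    hsm.enroll("alice", "2580")
    # The right passcode is fourth in the attacker's list, one guess too late.
    print(dictionary_attack(hsm, "alice", ["1234", "0000", "1111", "2580"]))
```

Note what the sketch also shows: once the limit is hit, the key is gone for the legitimate user too. A real HSM enforces this in tamper-resistant hardware rather than in a Python dictionary, but the tradeoff is the same.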

Except: not yay! Because, as Justin points out (and here I’m paraphrasing in my own words) users are the absolute worst. Not only do they choose lousy passcodes, but they constantly forget them. And when they forget their passcode and can’t get their backups, do they blame themselves? Of course not! They blame Justin. Or rather, they complain loudly to their cloud backup providers.

While this might sound like an extreme characterization, remember: when you have a billion users, the extreme ones will show up quite a bit.

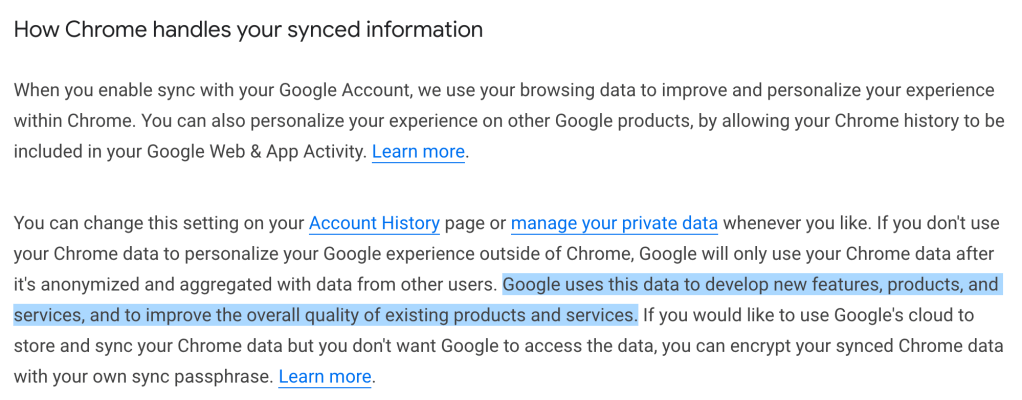

The consequence of this, argues Justin, is that most cloud backup services don’t use end-to-end encryption by default for browser synchronization. Hence your bookmarks and, in this case, your browsing history will be stored at your provider in plaintext. Justin’s point is that this decision flows from the typical user’s expectations and is not something providers have much discretion about.

And if that means your browsing history happens to get data-mined, well: the spice must flow.

Except none of this is quite true, thanks to Apple!

The interesting thing about this explanation is that it’s not quite true. I was inclined to believe it, until I went spelunking through the Apple iCloud security docs and found that Apple does things slightly differently.

(Note that I don’t mean to blame Justin for not knowing this. The problem here is that Apple absolutely sucks at communicating their security features to an audience that isn’t obsessed with reading their technical documentation. My students and I happen to be obsessive, and sometimes it pays dividends.)

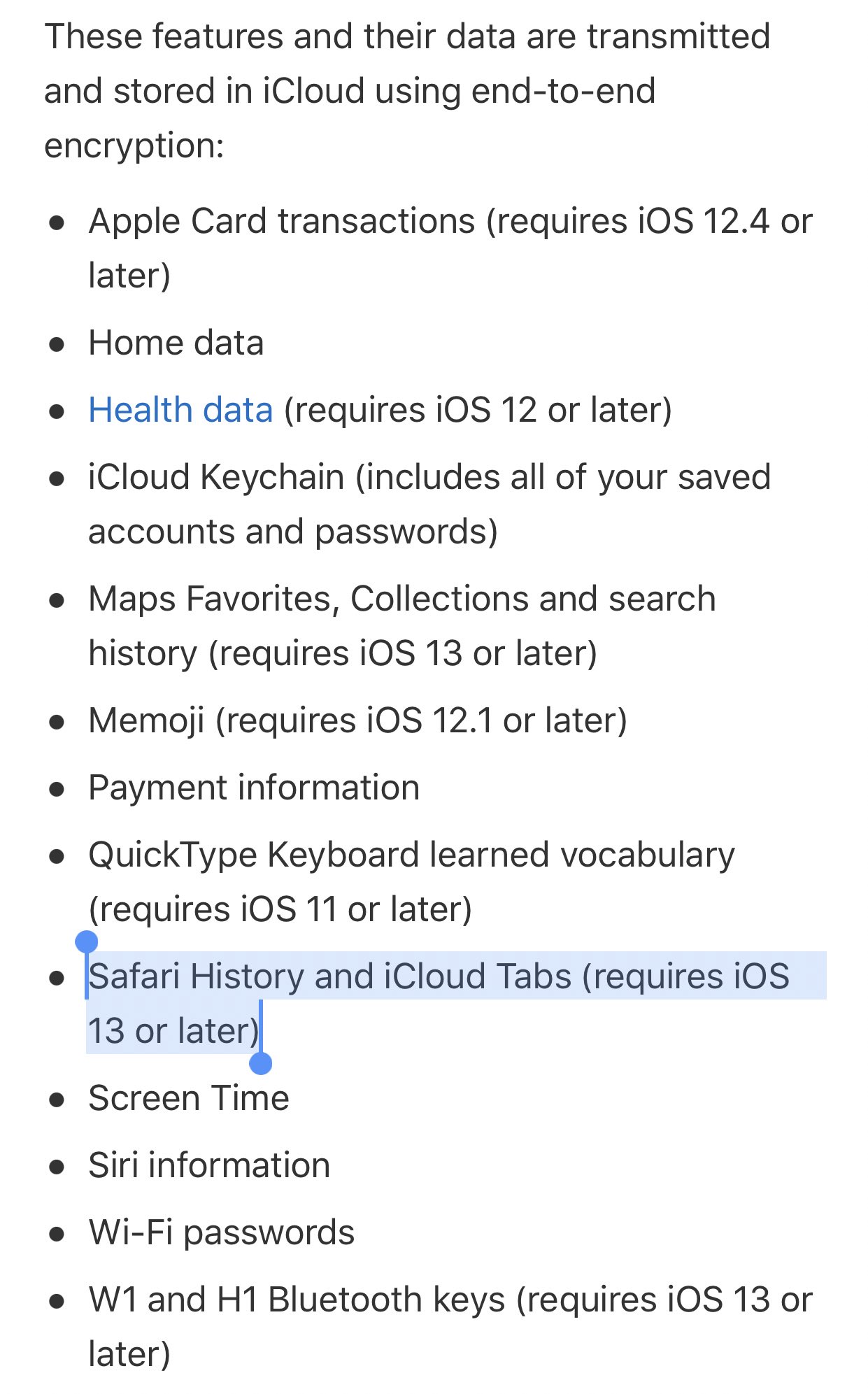

What I learned from my exploration (and here I pray the documentation is accurate) is that Apple actually does seem to provide end-to-end encryption for browser data. Or more specifically: they provide end-to-end encryption for browser history data as of iOS 13.

More concretely, Apple claims that this data is protected “with a passcode”, and that “nobody else but you can read this data.” Presumably this means Apple is using their iCloud Keychain HSMs to store the necessary keys, in a way that Apple itself can’t access.

What’s interesting about the Apple decision is that it appears to explicitly separate browsing history and bookmarks, rather than lumping them into a single take-it-or-leave-it package. Apple doesn’t claim to provide any end-to-end encryption guarantees whatsoever for bookmarks: presumably someone who resets your iCloud account password can get those. But your browsing history is protected in a way that even Apple won’t be able to access, in case the FBI show up with a subpoena.

That seems like a big deal and I’m surprised that it’s gotten so little attention.

Why should browser history be lumped together with bookmarks?

This question gets at the heart of why I think browser synchronization is such an alien concept. From my perspective, browsing history is an incredibly sensitive and personal thing that I don’t want anywhere. Bookmarks, if I actually used them, would be the sort of thing I’d want to preserve.

I can see the case for keeping history on my local devices. It makes autocomplete faster, and it’s nice to find that page I browsed yesterday. I can see the case for (securely) synchronizing history across my active devices. But backing it up to the cloud in case my devices all get stolen? Come on. This is like the difference between backing up my photo library, and attaching a GoPro to my head while I’m using the bathroom.

(And Google’s “sync” service only stores 90 days of history, so it isn’t even a long-term backup.)

One cynical answer to this question is: these two very different forms of data are lumped together because one of them — browser history — is extremely valuable for advertising companies. The other one is valuable to consumers. So lumping them together gets consumers to hand over the sweet, sweet data in exchange for something they want. This might sound critical, but on the other hand, we’re just describing the financial incentive that we know drives most of today’s Internet.

A less cynical answer is that consumers really want to preserve their browsing history. When I asked on Twitter, a bunch of tech folks noted that they use their browsing history as an ad-hoc bookmarking system. This all seemed to make some sense, and so maybe there’s just something I don’t get about browser history.

However, the important thing to keep in mind here is that just because you do this doesn’t mean it should drive a few billion people’s security posture. The implication of prioritizing the availability of browser history backups (as a default) is that vast numbers of people will essentially have their entire history uploaded to the cloud, where it can be accessed by hackers, police and surveillance agencies.

Apple seems to have made a different calculation: not that history isn’t valuable, but that it isn’t a good idea to hold the detailed browser history of a billion human beings in a place where any two-bit police agency or hacker can access it. I have a very hard time faulting them for that.

And if that means a few users get upset, that seems like a good tradeoff to me.
