A few months ago it was starting to seem like you couldn’t go a week without a new attack on TLS. In that context, this summer has been a blessed relief. Sadly, it looks like our vacation is over, and it’s time to go back to school.

on TLS. In that context, this summer has been a blessed relief. Sadly, it looks like our vacation is over, and it’s time to go back to school.

Today brings the news that Karthikeyan Bhargavan and Gaëtan Leurent out of INRIA have a new paper that demonstrates a practical attack on legacy ciphersuites in TLS (it’s called “Sweet32”, website here). What they show is that ciphersuites that use 64-bit blocklength ciphers — notably 3DES — are vulnerable to plaintext recovery attacks that work even if the attacker cannot recover the encryption key.

While the principles behind this attack are well known, there’s always a difference between attacks in principle and attacks in practice. What this paper shows is that we really need to start paying attention to the practice.

So what’s the matter with 64-bit block ciphers?

Block ciphers are one of the most widely-used cryptographic primitives. As the name implies, these are schemes designed to encipher data in blocks, rather than a single bit at a time.

implies, these are schemes designed to encipher data in blocks, rather than a single bit at a time.

The two main parameters that define a block cipher are its block size (the number of bits it processes in one go), and its key size. The two parameters need not be related. So for example, DES has a 56-bit key and a 64-bit block. Whereas 3DES (which is built from DES) can use up to a 168-bit key and yet still has the same 64-bit block. More recent ciphers have opted for both larger blocks and larger keys.

When it comes to the security provided by a block cipher, the most important parameter is generally the key size. A cipher like DES, with its tiny 56-bit key, is trivially vulnerable to brute force attacks that attempt decryption with every possible key (often using specialized hardware). A cipher like AES or 3DES is generally not vulnerable to this sort of attack, since the keys are much longer.

However, as they say: key size is not everything. Sometimes the block size matters too.

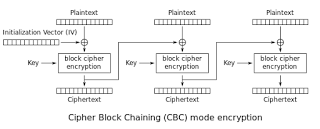

You see, in practice, we often need to encrypt messages that are longer than a single block. We also tend to want our encryption to be randomized. To accomplish this, most protocols use a block cipher in a scheme called a mode of operation. The most popular mode used in TLS is CBC mode. Encryption in CBC looks like this:

The nice thing about CBC is that (leaving aside authentication issues) it can be proven (semantically) secure if we make various assumptions about the security of the underlying block cipher. Yet these security proofs have one important requirement. Namely, the attacker must not receive too much data encrypted with a single key.

The reason for this can be illustrated via the following simple attack.

Imagine that an honest encryptor is encrypting a bunch of messages using CBC mode. Following the diagram above, this involves selecting a random Initialization Vector () of size equal to the block size of the cipher, then XORing

with the first plaintext block (

), and enciphering the result (

). The

is sent (in the clear) along with the ciphertext.

Most of the time, the resulting ciphertext block will be unique — that is, it won’t match any previous ciphertext block that an attacker may have seen. However, if the encryptor processes enough messages, sooner or later the attacker will see a collision. That is, it will see a ciphertext block that is the same as some previous ciphertext block. Since the cipher is deterministic, this means the cipher’s input () must be identical to the cipher’s previous input

that created the previous block.

In other words, we have , which can be rearranged as

. Since the IVs are random and known to the attacker, the attacker has (with high probability) learned the XOR of two (unknown) plaintexts!

What can you do with the XOR of two unknown plaintexts? Well, if you happen to know one of those two plaintext blocks — as you might if you were able to choose some of the plaintexts the encryptor was processing — then you can easily recover the other plaintext. Alternatively, there are known techniques that can sometimes recover useful data even when you don’t know both blocks.

The main lesson here is that this entire mess only occurs if the attacker sees a collision. And the probability of such a collision is entirely dependent on the size of the cipher block. Worse, thanks to the (non-intuitive) nature of the birthday bound, this happens much more quickly than you might think it would. Roughly speaking, if the cipher block is b bits long, then we should expect a collision after roughly encrypted blocks.

In the case of a 64-bit blocksize cipher like 3DES, this is somewhere in the vicinity of , or around 4 billion enciphered blocks.

(As a note, the collision does not really need to occur in the first block. Since all blocks in CBC are calculated in the same way, it could be a collision anywhere within the messages.)

Whew. I thought this was a practical attack. 4 billion is a big number!

It’s true that 4 billion blocks seems like an awfully large number. In a practical attack, the requirements would be even larger — since the most efficient attack is for the attacker to know a lot of the plaintexts, in the hope that she will be able to recover one unknown plaintext when she learns the value (P ⊕ P’).

However, it’s worth keeping in mind that these traffic numbers aren’t absurd for TLS. In practice, 4 billion 3DES blocks works out to 32GB of raw ciphertext. A lot to be sure, but not impossible. If, as the Sweet32 authors do, we assume that half of the plaintext blocks are known to the attacker, we’d need to increase the amount of ciphertext to about 64GB. This is a lot, but not impossible.

The Sweet32 authors take this one step further. They imagine that the ciphertext consists of many HTTPS connections, consisting of 512 bytes of plaintext, in each of which is embedded the same secret 8-byte cookie — and the rest of the session plaintext is known. Calculating from these values, they obtain a requirement of approximately 256GB of ciphertext needed to recover the cookie with high probability.

That is really a lot.

But keep in mind that TLS connections are being used to encipher increasingly more data. Moreover, a single open browser frame running attacker-controlled Javascript can produce many gigabytes of ciphertext in a single hour. So these attacks are not outside of the realm of what we can run today, and presumably will be very feasible in the future.

How does the TLS attack work?

While the cryptographic community has been largely pushing TLS away from ciphersuites like CBC, in favor of modern authenticated modes of operation, these modes still exist in TLS. And they exist not only for use not only with modern ciphers like AES, but they are often available for older ciphersuites like 3DES. For example, here’s a connection I just made to Google:

Of course, just because a server supports 3DES does not mean that it’s vulnerable to this attack. In order for a particular connection to be vulnerable, both the client and server must satisfy three main requirements:

- The client and server must negotiate a 64-bit cipher. This is a relatively rare occurrence, but can happen in cases where one of the two sides is using an out-of-date client. For example, stock Windows XP does not support any of the AES-based ciphersuites. Similarly, SSL3 connections may negotiate 3DES ciphersuites.

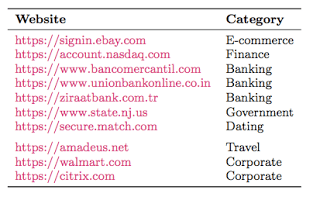

- The server and client must support long-lived TLS sessions, i.e., encrypting a great deal of data with the same key. Unfortunately, most web browsers place no limit on the length of an HTTPS session if Keep-Alive is used, provided that the server allows the session. The Sweet32 authors scanned and discovered that many servers (including IIS) will allow sessions long enough to run their attack. Across the Internet, the percentage of vulnerable servers is small (less than 1%), but includes some important sites.

- The client must encipher a great deal of known data, including a secret session cookie. This is generally achieved by running adversarial Javascript code in the browser, although it could be done using standard HTML as well.

Sites vulnerable to Sweet32. (source)

These caveats aside, the authors were able to run their attack using Firefox, sending at a rate of about 1500 connections per second. With a few optimizations, they were able to recover a 16-byte secret cookie in about 30 hours (a lucky result, given an expected 38 hour run time).The client must encipher a great deal of known data, including a secret session cookie. This is generally achieved by running adversarial Javascript code in the browser, although it could be done using standard HTML as well.

So what do we do now?

While this is not an earthshaking result, it’s roughly comparable to previous results we’ve seen with legacy ciphers like RC4.

In short, while these are not the easiest attacks to run, it’s a big problem that there even exist semi-practical attacks that undo the encryption used in standard encryption protocols. This is a problem that we should address, and these attack papers help to make those problems more clear.

I have some questions:

1. I realized the answer to my former question 1 after writing this, so instead: what is your favorite flavor of ice cream?

2. I was under the impression that an SSL connection periodically generates new symmetric keys and new temporary public key pairs as well used to send the symmetric keys. Is this not the case?

3. Don’t you only retrieve the data in the collision blocks? In others words, just 8 bytes of data.

4. Isn’t it impossible to control where in the ciphertext the collision occurs?

Given 3 & 4, as well as the need to have a session transmit hundreds of gigs without the user or program terminating it, this seems very impractical. Don’t get me wrong, I’m all for switching cipher modes when there is an even minutely “semi-practical” break (such as the case here), but I do this it’s unlikely this will be put to practical use. If the goal is something like getting a cookie, there have been various vulnerabilities over the years that make getting it or using it without knowing it (eg XSS attacks) much more practical.

I do appreciate the post though. It was very interesting. I just came across your blog linked from wikipedia on the nsa backdoored EC prng and may follow it more in the future.

I have a followup to my previous questions. Excuse me as I’m about to go to bed and am half awake here. I think I may have realized the answer to another of my questions, but since my comment hasn’t been approved yet, I can’t see it. If you see one asking about needing a collision to happen in the exact same spot, ignore it, since I realized that doesn’t matter because the calculation is for a single “chosen” specific location.

Anyway, I’m not so sure about their claim that “many” websites allow a “very large” number of connections. Even with IIS (ungh), in their test they ran for over 2 days straight, so I’d have imagine either due to sheer volume of requests or raw bandwidth usage from one ip, either a web or network admin would notice, for any website worth its salt. I also wonder if they throttled/limited their bandwidth in the rest to be more realistic. I almost forgot, but some isp monthly usage limits (double ungh) are close to that, too.

What is minimum attack complexity in solving following system of equation,

[-920 = [1 -1 -1 1 [x1]

960 1 -1 1 -1] x2

x3

x4]

where (x1,x2,x3,x4) is the correct possible combination of (310,20,1250,40)?