At Monday’s WWDC conference, Apple announced a cool new feature called “Find My”. Unlike Apple’s “Find My iPhone”, which uses cellular communication and the lost device’s own GPS to identify the location of a missing phone, “Find My” also lets you find devices that don’t have cellular support or internal GPS: things like laptops, or (and Apple has hinted at this only broadly) even “dumb” location tags that you can attach to your non-electronic physical belongings.

The idea of the new system is to turn Apple’s existing network of iPhones into a massive crowdsourced location tracking system. Every active iPhone will continuously monitor for BLE beacon messages that might be coming from a lost device. When it picks up one of these signals, the participating phone tags the data with its own current GPS location; then it sends the whole package up to Apple’s servers. This will be great for people like me, who are constantly losing their stuff: if I leave my backpack on a tour bus in China or in my office, sooner or later someone else will stumble on its signal and I’ll instantly know where to find it.

(It’s worth mentioning that Apple didn’t invent this idea. In fact, companies like Tile have been doing this for quite a while. And yes, they should probably be worried.)

If you haven’t already been inspired by the description above, let me phrase the question you ought to be asking: how is this system going to avoid being a massive privacy nightmare?

Let me count the concerns:

- If your device is constantly emitting a BLE signal that uniquely identifies it, the whole world is going to have (yet another) way to track you. Marketers already use WiFi and Bluetooth MAC addresses to do this: Find My could create yet another tracking channel.

- It also exposes the phones who are doing the tracking. These people are now going to be sending their current location to Apple (which they may or may not already be doing). Now they’ll also be potentially sharing this information with strangers who “lose” their devices. That could go badly.

- Scammers might also run active attacks in which they fake the location of your device. While this seems unlikely, people will always surprise you.

The good news is that Apple claims that their system actually does provide strong privacy, and that it accomplishes this using clever cryptography. But as is typical, they’ve declined to give out the details of how they’re going to do it. Andy Greenberg talked me through an incomplete technical description that Apple provided to Wired, which offers many hints. Unfortunately, what Apple provided still leaves huge gaps, and I’m going to fill those gaps with my best guess for what Apple is actually doing.

A big caveat: much of this could be totally wrong. I’ll update it relentlessly when Apple tells us more.

Some quick problem-setting

To lay out our scenario, we need to bring several devices into the picture. For inspiration, we’ll draw from the 1950s television series “Lassie”.

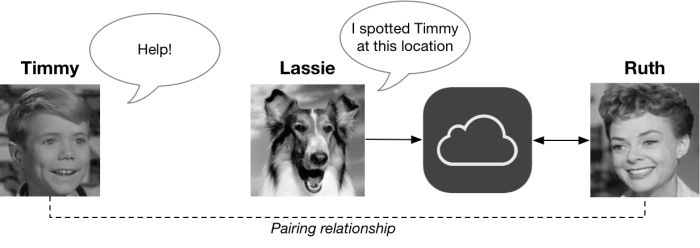

A first device, which we’ll call Timmy, is “lost”. Timmy has a BLE radio but no GPS or connection to the Internet. Fortunately, he’s been previously paired with a second device called Ruth, who wants to find him. Our protagonist is Lassie: she’s a random (and unknowing) stranger’s iPhone, and we’ll assume that she has at least an occasional Internet connection and solid GPS. She is also a very good girl. The networked devices communicate via Apple’s iCloud servers, as shown below:

(Since Timmy and Ruth have to be paired ahead of time, it’s likely they’ll both be devices owned by the same person. Did I mention that you’ll need to buy two Apple devices to make this system work? That’s also just fine for Apple.)

Since this is a security system, the first question you should ask is: who’s the bad guy? The answer in this setting is unfortunate: everyone is potentially a bad guy. That’s what makes this problem so exciting.

Keeping Timmy anonymous

The most critical aspect of this system is that we need to keep unauthorized third parties from tracking Timmy, especially when he’s not lost. This precludes some pretty obvious solutions, like having the Timmy device simply shout “Hi my name is Timmy, please call my mom Ruth and let her know I’m lost.” It also precludes just about any unchanging static identifier, even an opaque and random-looking one.

This last requirement is inspired by the development of services that abuse static identifiers broadcast by your devices (e.g., your WiFi MAC address) to track devices as you walk around. Apple has been fighting this — with mixed success — by randomizing things like MAC addresses. If Apple added a static tracking identifier to support the “Find My” system, all of these problems could get much worse.

This requirement means that any messages broadcast by Timmy have to be opaque — and moreover, the contents of these messages must change, relatively frequently, to new values that can’t be linked to the old ones. One obvious way to realize this is to have Timmy and Ruth agree on a long list of random “pseudonyms” for Timmy, and have Timmy pick a different one each time.
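To make the pseudonym-list idea concrete, here is a minimal Python sketch. The 16-byte pseudonym size, the list length of 1,000, and the `Timmy` class are hypothetical choices for illustration; nothing here reflects Apple’s actual broadcast format.

```python
import secrets

PSEUDONYM_BYTES = 16   # hypothetical size of one broadcast identifier
LIST_LENGTH = 1000     # hypothetical number of pre-agreed pseudonyms


def make_pseudonym_list(n: int = LIST_LENGTH) -> list[bytes]:
    """Generate n independent random pseudonyms at pairing time.

    Both Timmy and Ruth keep a copy of this list.
    """
    return [secrets.token_bytes(PSEUDONYM_BYTES) for _ in range(n)]


class Timmy:
    """The lost device: broadcasts a different pseudonym each time."""

    def __init__(self, pseudonyms: list[bytes]):
        self.pseudonyms = pseudonyms
        self.index = 0

    def next_broadcast(self) -> bytes:
        # Walk the list in order so no pseudonym repeats until the list wraps.
        p = self.pseudonyms[self.index % len(self.pseudonyms)]
        self.index += 1
        return p


shared_list = make_pseudonym_list()
timmy = Timmy(shared_list)
ruth_copy = list(shared_list)   # Ruth stores the same list for later searches

first, second = timmy.next_broadcast(), timmy.next_broadcast()
print(first != second)          # consecutive broadcasts look unrelated
print(first in ruth_copy)       # but Ruth can still recognize them
```

Since the pseudonyms are independent random strings, an observer who hears two of them learns nothing linking them; only holders of the list can.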

This helps a lot. Each time Lassie sees some (unknown) device broadcasting an identifier, she won’t know if it belongs to Timmy, but she can send it up to Apple’s servers along with her own GPS location. In the event that Timmy ever gets lost, Ruth can ask Apple to search for every single one of Timmy’s possible pseudonyms. Since nobody outside of Apple ever learns this list, and even Apple only learns it after someone gets lost, this approach prevents most tracking.
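A minimal sketch of the server side of this scheme, assuming a plain in-memory dictionary as a stand-in for Apple’s servers (the `ToyServer` class and its methods are hypothetical; nothing is known about how Apple actually stores reports). In this naive version Lassie’s location is uploaded in the clear, and Ruth has to submit every pseudonym on her list.

```python
import secrets
from collections import defaultdict


class ToyServer:
    """Stand-in for Apple's servers: maps pseudonym -> list of reported locations."""

    def __init__(self):
        self.reports = defaultdict(list)

    def upload(self, pseudonym: bytes, location: tuple[float, float]) -> None:
        # Lassie's report: the identifier she heard plus her own GPS fix.
        self.reports[pseudonym].append(location)

    def search(self, pseudonyms: list[bytes]) -> list[tuple[float, float]]:
        # Ruth's query: one lookup per pseudonym Timmy might have broadcast.
        found = []
        for p in pseudonyms:
            found.extend(self.reports.get(p, []))
        return found


pseudonyms = [secrets.token_bytes(16) for _ in range(1000)]
server = ToyServer()

# Lassie hears two broadcasts: one from Timmy, one from an unrelated device.
server.upload(pseudonyms[42], (39.2904, -76.6122))
server.upload(secrets.token_bytes(16), (40.7128, -74.0060))

print(server.search(pseudonyms))   # [(39.2904, -76.6122)]
```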

A slightly more efficient way to implement this idea is to use a cryptographic function (like a MAC or hash function) in order to generate the list of pseudonyms from a single short “seed” that both Timmy and Ruth will keep a copy of. This is nice because the data stored by each party will be very small. However, to find Timmy, Ruth must still send all of the pseudonyms — or her “seed” — up to Apple, who will have to search its database for each one.
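A sketch of the seed-based variant, assuming HMAC-SHA256 as the “MAC or hash function”, a 32-byte seed, and 16-byte pseudonyms (all illustrative choices, not Apple’s). Ruth regenerates any pseudonym from the seed instead of storing a list; the function name `pseudonym` is hypothetical.

```python
import hashlib
import hmac
import secrets


def pseudonym(seed: bytes, i: int) -> bytes:
    """Derive the i-th pseudonym as HMAC-SHA256(seed, i), truncated to 16 bytes."""
    msg = i.to_bytes(8, "big")
    return hmac.new(seed, msg, hashlib.sha256).digest()[:16]


seed = secrets.token_bytes(32)   # the only secret Timmy and Ruth need to store

# Timmy computes pseudonyms on the fly as he broadcasts...
broadcasts = [pseudonym(seed, i) for i in range(3)]

# ...and Ruth, holding the same seed, can regenerate the whole list on demand.
ruth_list = [pseudonym(seed, i) for i in range(1000)]
print(all(b in ruth_list for b in broadcasts))
```

Without the seed, consecutive outputs of HMAC look unrelated, which is what keeps the broadcasts unlinkable.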

Hiding Lassie’s location

The pseudonym approach described above should work well to keep Timmy’s identity hidden from Lassie, and even from Apple (up until the point that Ruth searches for it). However, it’s got a big drawback: it doesn’t hide Lassie’s GPS coordinates.

This is bad for at least a couple of reasons. Each time Lassie detects some device broadcasting a message, she needs to transmit her current position (along with the pseudonym she sees) to Apple’s servers. This means Lassie is constantly telling Apple where she is. And moreover, even if Apple promises not to store Lassie’s identity, the result of all these messages is a huge centralized database that shows every GPS location where some Apple device has been detected.

Note that this data, in the aggregate, can be pretty revealing. Yes, the identifiers of the devices might be pseudonyms — but that doesn’t make the information useless. For example: a record showing that some Apple device is broadcasting from my home address at certain hours of the day would probably reveal when I’m in my house.

An obvious way to prevent this data from being revealed to Apple is to encrypt it, so that only parties who actually need to know the location of a device can see this information. If Lassie picks up a broadcast from Timmy, then the only person who actually needs to know Lassie’s GPS location is Ruth. To keep this information private, Lassie should encrypt her coordinates under Ruth’s encryption key.
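A toy sketch of Lassie encrypting her coordinates under Ruth’s public key. It uses hashed Elgamal (a Diffie-Hellman share plus a SHA-256-derived keystream) over integers modulo the Mersenne prime 2^127 - 1 with base 3. These parameters, the XOR keystream, and all function names are assumptions chosen only so the code runs on Python’s standard library; they are not secure. A real design would use a standard elliptic-curve group and an authenticated cipher.

```python
import hashlib
import secrets

# Toy group parameters (NOT secure): integers modulo the Mersenne prime 2^127 - 1.
P = 2**127 - 1
G = 3


def keygen() -> tuple[int, int]:
    """Ruth's key pair: secret x, public y = G^x mod P."""
    x = secrets.randbelow(P - 2) + 1
    return x, pow(G, x, P)


def keystream(shared: int, n: int) -> bytes:
    """Expand a Diffie-Hellman value into n bytes with SHA-256 in counter mode."""
    seed = shared.to_bytes(16, "big")
    out = b""
    counter = 0
    while len(out) < n:
        out += hashlib.sha256(seed + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:n]


def encrypt(y: int, plaintext: bytes) -> tuple[int, bytes]:
    """Lassie's side: hashed Elgamal encryption under public key y."""
    k = secrets.randbelow(P - 2) + 1
    c1 = pow(G, k, P)                     # ephemeral share sent with the ciphertext
    ks = keystream(pow(y, k, P), len(plaintext))
    return c1, bytes(a ^ b for a, b in zip(plaintext, ks))


def decrypt(x: int, c1: int, c2: bytes) -> bytes:
    """Ruth's side: recompute y^k as c1^x and strip the keystream."""
    ks = keystream(pow(c1, x, P), len(c2))
    return bytes(a ^ b for a, b in zip(c2, ks))


ruth_x, ruth_y = keygen()
ciphertext = encrypt(ruth_y, b"39.2904,-76.6122")
print(decrypt(ruth_x, *ciphertext))   # b'39.2904,-76.6122'
```

Apple, which only relays `ciphertext`, never sees the coordinates; only the holder of `ruth_x` can recover them.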

This, of course, raises a problem: how does Lassie get Ruth’s key? An obvious solution is for Timmy to shout out Ruth’s public key as part of every broadcast he makes. Of course, this would produce a static identifier that would make Timmy’s broadcasts linkable again.

To solve that problem, we need Ruth to have many unlinkable public keys, so that Timmy can give out a different one with each broadcast. One way to do this is to have Ruth and Timmy generate many different shared keypairs (or generate many from some shared seed). But this is annoying and involves Ruth storing many secret keys. And in fact, the identifiers we mentioned in the previous section can be derived by hashing each public key.
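A sketch of the “many keypairs from a shared seed” option, with each broadcast identifier derived by hashing the corresponding public key. Deriving secret exponents with HMAC-SHA256, the toy group (integers modulo 2^127 - 1, base 3), the 16-byte identifier length, and the function names are all assumptions for illustration, not secure parameters.

```python
import hashlib
import hmac
import secrets

P = 2**127 - 1   # toy modulus, NOT secure
G = 3


def derive_keypair(seed: bytes, i: int) -> tuple[int, int]:
    """Derive Ruth's i-th key pair deterministically from the shared seed."""
    digest = hmac.new(seed, i.to_bytes(8, "big"), hashlib.sha256).digest()
    x = int.from_bytes(digest, "big") % (P - 2) + 1   # secret exponent in [1, P-2]
    return x, pow(G, x, P)


def identifier(public_key: int) -> bytes:
    """The broadcast pseudonym is just a truncated hash of the public key."""
    return hashlib.sha256(public_key.to_bytes(16, "big")).digest()[:16]


seed = secrets.token_bytes(32)

# Timmy broadcasts (identifier, public key) for index i, rotating i over time.
_, y5 = derive_keypair(seed, 5)
heard = (identifier(y5), y5)

# Ruth re-derives key pairs from the seed and looks up the matching index.
ruth_index = {identifier(derive_keypair(seed, i)[1]): i for i in range(100)}
print(ruth_index[heard[0]])   # 5
```

Note that whoever holds the seed can derive the secret keys too, so in this variant Timmy effectively holds Ruth’s decryption keys as well.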

A slightly better approach (that Apple may not employ) makes use of key randomization. This is a feature provided by cryptosystems like Elgamal: it allows any party to randomize a public key, so that the randomized key is completely unlinkable to the original. The best part of this feature is that Ruth can use a single secret key regardless of which randomized version of her public key was used to encrypt.
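A toy sketch of Elgamal key randomization: given Ruth’s public key (g, y = g^x), anyone can pick a random r and publish (g^r, y^r); since y^r = (g^r)^x, Ruth’s single secret x still decrypts. The toy group (integers modulo 2^127 - 1, base 3), the hashed-Elgamal keystream, and the function names are assumptions chosen so the code runs on the standard library. They are not secure; in particular, randomized keys are only unlinkable in a group where the decisional Diffie-Hellman problem is hard, and this toy group is not such a group.

```python
import hashlib
import secrets

P = 2**127 - 1   # toy modulus, NOT secure
G = 3


def rand_exp() -> int:
    return secrets.randbelow(P - 2) + 1


def keystream(shared: int, n: int) -> bytes:
    """SHA-256 in counter mode over the shared Diffie-Hellman value."""
    seed = shared.to_bytes(16, "big")
    blocks = (hashlib.sha256(seed + i.to_bytes(4, "big")).digest()
              for i in range((n + 31) // 32))
    return b"".join(blocks)[:n]


def randomize(pub: tuple[int, int]) -> tuple[int, int]:
    """Anyone (e.g. Timmy) can re-randomize (g, y) into (g^r, y^r)."""
    g, y = pub
    r = rand_exp()
    return pow(g, r, P), pow(y, r, P)


def encrypt(pub: tuple[int, int], msg: bytes) -> tuple[int, bytes]:
    """Hashed Elgamal under a (possibly randomized) public key (g, y)."""
    g, y = pub
    k = rand_exp()
    ks = keystream(pow(y, k, P), len(msg))
    return pow(g, k, P), bytes(a ^ b for a, b in zip(msg, ks))


def decrypt(x: int, c1: int, c2: bytes) -> bytes:
    """Ruth uses the same secret x no matter which randomized key was used."""
    ks = keystream(pow(c1, x, P), len(c2))
    return bytes(a ^ b for a, b in zip(c2, ks))


x = rand_exp()
ruth_pub = (G, pow(G, x, P))

key_a, key_b = randomize(ruth_pub), randomize(ruth_pub)
print(key_a != key_b)                                     # two fresh-looking keys
print(decrypt(x, *encrypt(key_a, b"39.2904,-76.6122")))   # b'39.2904,-76.6122'
print(decrypt(x, *encrypt(key_b, b"39.2904,-76.6122")))   # b'39.2904,-76.6122'
```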

All of this leads to a final protocol idea. Each time Timmy broadcasts, he uses a fresh pseudonym and a randomized copy of Ruth’s public key. When Lassie receives a broadcast, she encrypts her GPS coordinates under the public key, and sends the encrypted message to Apple. Ruth can send in Timmy’s pseudonyms to Apple’s servers, and if Apple finds a match, she can obtain and decrypt the GPS coordinates.
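Putting the pieces together, here is a toy end-to-end sketch of the protocol as guessed in this post. Every concrete choice is an assumption for illustration: HMAC-SHA256 pseudonyms from a shared seed, hashed Elgamal over integers modulo 2^127 - 1 (not secure), a dictionary standing in for Apple’s servers, and all class and function names.

```python
import hashlib
import hmac
import secrets

P, G = 2**127 - 1, 3   # toy group, NOT secure


def rand_exp() -> int:
    return secrets.randbelow(P - 2) + 1


def pseudonym(seed: bytes, i: int) -> bytes:
    return hmac.new(seed, i.to_bytes(8, "big"), hashlib.sha256).digest()[:16]


def xor_keystream(shared: int, data: bytes) -> bytes:
    """XOR data with a SHA-256 keystream derived from a Diffie-Hellman value."""
    seed = shared.to_bytes(16, "big")
    ks = b"".join(hashlib.sha256(seed + j.to_bytes(4, "big")).digest()
                  for j in range((len(data) + 31) // 32))
    return bytes(a ^ b for a, b in zip(data, ks))


class Timmy:
    def __init__(self, seed: bytes, ruth_pub: tuple[int, int]):
        self.seed, self.ruth_pub, self.i = seed, ruth_pub, 0

    def broadcast(self) -> tuple[bytes, tuple[int, int]]:
        """Fresh pseudonym plus a freshly randomized copy of Ruth's key."""
        g, y = self.ruth_pub
        r = rand_exp()
        msg = (pseudonym(self.seed, self.i), (pow(g, r, P), pow(y, r, P)))
        self.i += 1
        return msg


def lassie_report(msg, location: bytes):
    """Lassie encrypts her coordinates under the key in Timmy's broadcast."""
    pid, (g, y) = msg
    k = rand_exp()
    return pid, (pow(g, k, P), xor_keystream(pow(y, k, P), location))


server: dict[bytes, list] = {}   # stand-in for Apple's servers


def server_upload(pid: bytes, ciphertext) -> None:
    server.setdefault(pid, []).append(ciphertext)


def ruth_search(seed: bytes, x: int, n: int) -> list[bytes]:
    """Ruth submits her first n pseudonyms and decrypts whatever comes back."""
    found = []
    for i in range(n):
        for c1, c2 in server.get(pseudonym(seed, i), []):
            found.append(xor_keystream(pow(c1, x, P), c2))
    return found


seed, x = secrets.token_bytes(32), rand_exp()
timmy = Timmy(seed, (G, pow(G, x, P)))

timmy.broadcast()                                # nobody hears this one
server_upload(*lassie_report(timmy.broadcast(), b"39.2904,-76.6122"))
print(ruth_search(seed, x, n=10))                # [b'39.2904,-76.6122']
```

The server only ever holds pseudonyms and ciphertexts; it learns which pseudonyms Ruth cares about, but not where Lassie was.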

Does this solve all the problems?

The nasty thing about this problem setting is that, with many weird edge cases, there just isn’t a perfect solution. For example, what if Timmy is evil and wants to make Lassie reveal her location to Apple? What if Old Man Smithers tries to kidnap Lassie?

At a certain point, the answer to these questions is just to say that we’ve done our best: any remaining problems will have to be outside the threat model. Sometimes even Lassie knows when to quit.

Just one small point: I imagine that this will be accessible through icloud.com, as with the existing Find My iPhone, so there’s probably no need for two Apple devices.

Right now, Apple is saying that two devices are needed. This is from the Wired article linked above:

> The solution to that paradox, it turns out, is a trick that requires you to own at least two Apple devices. Each one emits a constantly changing key that nearby Apple devices use to encrypt and upload your geolocation data, such that only the other Apple device you own possesses the key to decrypt those locations.

and

> When you first set up Find My on your Apple devices—and Apple confirmed you do need at least two devices for this feature to work—it generates an unguessable private key that’s shared on all those devices via end-to-end encrypted communication, so that only those machines possess the key.

I assume they are using a mesh network…

😉

Another problem with all these radio frequency emissions not requiring any fancy decryption is that it allows for another kind of bad act. If a thief knows to look for Bluetooth emissions using a phone app, they can pick the right car to break into so they can steal something of value. This was reported to me by a victim whose car (along with several others) was targeted. I thought at the time it might have been WiFi leakage but Bluetooth sounds more likely.

True, you may see a Bluetooth broadcast and surmise that an Apple device exists somewhere close by. But it may also make the device less appealing, knowing that this Apple device will always be trying to phone home. It would be harder to resell as a whole to someone else. You could still sell parts, but that isn’t as valuable as a whole, clean device.

What’s as yet unclear to me is whether the devices will continue to emit BLE messages even if they’re nominally “powered off.” If they don’t, and if there’s no option of requiring a passcode/FaceID for powering off, then it’s not much better as a theft deterrent than the current iteration of Find My Phone.

You can just wrap stuff with tin foil and no-one is going to track that…

That’s the thing. No one is ever going to wrap their stuff in tin foil. No one is ever going to bother with anything that has to be done additionally. Even if Apple provided an option to turn off this service, 99% of users would still snitch on the remaining 1% of devices. Going into some menu is tedious.

Sure, and then they can be certain to only take them out in sanitized areas. But the activation-locked devices aren’t that valuable anyway. You don’t have to make it impossible to steal them to have an impact on criminal behavior; you only need to make it that much more inconvenient.

You shouldn’t need to use ElGamal; you can solve this with just ECC and ECDH (though it might require a slightly larger data payload).

EC-Elgamal and ECDH (used as an encryption scheme rather than a key exchange) are basically the same thing. They both have the same randomization properties, if you instantiate them on an appropriate curve.

Two additional cryptographic techniques can improve the proposed solution.

1) Time synchronization. If we assume that Timmy, Lassie, Apple and Ruth are communicating with low network latency, and that Timmy’s offline time drift is relatively low, then Timmy can encrypt and Ruth can decrypt using an additional side channel of information: current time. This removes the need for a seed since protection against seed predictability is not required.

2) Rainbow hashing. By applying hash^N(x), one value can produce many values and any of those values can be looked up by comparing to a final value.

New features:

1) New identifier every second, difficult to correlate.

2) No seed, minimize data transfer and deanonymizing risk.

Approximate implementation:

Assume parameter MAXIMUM_NETWORK_DELAY + MAXIMUM_TIME_DRIFT = 60 (seconds)

1) Timmy and Ruth agree on a UUID as the identifier.

2) Timmy and Ruth synchronously hash UUID once per second (i.e. hash(UUID), hash(hash(UUID)), …).

3) Every minute, Timmy and Ruth increment UUID.

4) Timmy sends the current hashed UUID, hash^N(UUID) (N being the number of times the UUID has been hashed so far this minute), along with the encrypted payload, to Lassie and Apple.

5) Ruth sends hash^60(UUID) to Apple

6) For every hash h in its database, Apple calculates h, hash(h), hash^2(h), …, hash^59(h) and compares each against Ruth’s hash^60(UUID).

7) If match is found, proceed as per original article.
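A sketch of the hash-chain idea in this comment, assuming SHA-256 as the hash, a random 16-byte value as the “UUID”, “incrementing” it by adding the minute counter, and a 60-second window. These choices and the function names are illustrative, not part of the comment. It also shows why Apple must hash each stored value forward (up to 59 more times) until it either reaches Ruth’s hash^60(UUID) or gives up.

```python
import hashlib
import secrets

WINDOW = 60   # seconds: assumed MAXIMUM_NETWORK_DELAY + MAXIMUM_TIME_DRIFT


def h(x: bytes) -> bytes:
    return hashlib.sha256(x).digest()


def h_n(x: bytes, n: int) -> bytes:
    """Apply the hash n times: hash^n(x)."""
    for _ in range(n):
        x = h(x)
    return x


def uuid_for_minute(base: bytes, minute: int) -> bytes:
    """'Increment the UUID' once per minute by adding the minute counter."""
    value = (int.from_bytes(base, "big") + minute) % 2**128
    return value.to_bytes(16, "big")


def timmy_identifier(base: bytes, t: int) -> bytes:
    """Identifier Timmy broadcasts at second t: hash^N(UUID), N = 1..60."""
    minute, second = divmod(t, WINDOW)
    return h_n(uuid_for_minute(base, minute), second + 1)


def ruth_query(base: bytes, minute: int) -> bytes:
    """Ruth sends only the end of the chain for the minute she cares about."""
    return h_n(uuid_for_minute(base, minute), WINDOW)


def apple_matches(stored: bytes, target: bytes) -> bool:
    """Hash a stored identifier forward until it equals the target or gives up."""
    x = stored
    for _ in range(WINDOW):
        if x == target:
            return True
        x = h(x)
    return False


base = secrets.token_bytes(16)
database = [timmy_identifier(base, t) for t in (125, 130)] + [secrets.token_bytes(32)]

target = ruth_query(base, minute=2)
print([apple_matches(entry, target) for entry in database])   # [True, True, False]
```

One consequence worth noting: the same forward hashing that lets Apple match a stored value also lets any eavesdropper who hears two identifiers from the same minute hash the earlier one forward and link them, so under this scheme identifiers are only unlinkable across minutes.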

How does Timmy know he is lost? Ruth is looking for Timmy, but how does she get Timmy to broadcast with BLE if he is offline to receive Ruth’s message?

Right, that’s what I was wondering. Does this mean that Lassie is reporting location for *every* Apple device she encounters? Seems like a waste of energy…

Lassie should report that she has seen a BLE broadcast, but shouldn’t send her own location with the announcement unless Apple responds and says that this particular event was under active watch (i.e. has been reported by Ruth).

We can also extend that: Lassie keeps a history of observed BLE broadcasts and their locations, and Apple can ask for a historical location to answer Ruth’s question of “where was Timmy an hour ago?”
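A sketch of the two suggestions above, assuming Lassie keeps a local log of (timestamp, identifier, location) sightings and announces only identifiers, disclosing a location when the server says that identifier is under watch, or when asked about past sightings. The class names, method names, integer timestamps in seconds, and the one-hour lookback are hypothetical.

```python
from dataclasses import dataclass, field

Location = tuple[float, float]


@dataclass
class Lassie:
    """A finder phone that keeps sightings locally and announces identifiers only."""
    log: list[tuple[int, bytes, Location]] = field(default_factory=list)

    def observe(self, t: int, ident: bytes, where: Location) -> bytes:
        self.log.append((t, ident, where))
        return ident                      # the announcement carries no location

    def locations(self, ident: bytes, since: int) -> list[tuple[int, Location]]:
        """Answer a query for one identifier, covering sightings at or after `since`."""
        return [(t, w) for t, i, w in self.log if i == ident and t >= since]


@dataclass
class Server:
    """Hypothetical server: learns locations only for identifiers under watch."""
    watched: set[bytes] = field(default_factory=set)
    disclosed: list[tuple[int, Location]] = field(default_factory=list)

    def announce(self, finder: Lassie, ident: bytes, t: int) -> None:
        # Live case: a watched identifier prompts Lassie for this sighting only.
        if ident in self.watched:
            self.disclosed.extend(finder.locations(ident, since=t))

    def report_lost(self, finder: Lassie, ident: bytes, since: int) -> None:
        # Historical case: "where was Timmy an hour ago?"
        self.watched.add(ident)
        self.disclosed.extend(finder.locations(ident, since))


lassie, server = Lassie(), Server()
sightings = [(3000, b"timmy", (39.2904, -76.6122)),
             (5000, b"timmy", (39.2950, -76.6100)),
             (6000, b"stranger", (40.7128, -74.0060))]
for t, ident, where in sightings:         # Timmy is not lost yet: nothing disclosed
    server.announce(lassie, lassie.observe(t, ident, where), t)

server.report_lost(lassie, b"timmy", since=7200 - 3600)   # Ruth asks at t = 7200
server.announce(lassie, lassie.observe(7300, b"timmy", (39.2990, -76.6050)), 7300)
print(server.disclosed)
```

The sighting at t = 3000 falls outside the one-hour lookback and the stranger is never watched, so neither location ever leaves Lassie’s phone.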