Over the past several months, Signal has been rolling out a raft of new features to make its app more usable. One of those features has recently been raising a bit of controversy with users. This is a contact list backup feature based on a new system called Secure Value Recovery, or SVR. The SVR feature allows Signal to upload your contacts to Signal’s servers without, ostensibly, even Signal itself being able to access them.

The new Signal approach has caused some consternation among security people, because it was recently enabled without a particularly clear explanation. For a shorter summary of the issue, see this article. In this post, I want to delve a little deeper into why these decisions have made me so concerned, and what Signal is doing to try to mitigate those concerns.

What’s Signal, and why does it matter?

For those who aren’t familiar with it, Signal is an open-source app developed by Moxie Marlinspike’s Signal Technology Foundation. Signal has received a lot of love from the security community. There are basically two reasons for this. First: the Signal app has served as a sort of technology demo for the Signal Protocol, which is the fundamental underlying cryptography that powers popular apps like Facebook Messenger and WhatsApp, and all their billions of users.

Second, the Signal app itself is popular with security-minded people, mostly because the app, with its relatively smaller and more technical user base, has tended towards a no-compromises approach to the security experience. Wherever usability concerns have come into conflict with security, Signal has historically chosen the more cautious and safer approach — as compared to more commercial alternatives like WhatsApp. As a strategy for obtaining large-scale adoption, this is a lousy one. If your goal is to build a really secure messaging product, it’s very impressive.

Let me give an example.

Encrypted messengers like WhatsApp and Apple’s iMessage routinely back up your text message content and contact lists to remote cloud servers. These backups undo much of the strong security offered by end-to-end encryption, since they make it much easier for hackers and governments to obtain your plaintext content. You can disable these backups, but it’s surprisingly non-obvious to do it right (for me, at least). The larger services justify this backup default by pointing out that their less-technical users tend to be more worried about lost message history than about theoretical cloud hacks.

Signal, by contrast, has taken a much more cautious approach to backup. In June of this year, they finally added a way to manually transfer message history from one iPhone to another, and this transfer now involves scanning QR codes. For Android, cloud backup is possible, if users are willing to write down a thirty-digit encryption key. This is probably really annoying for many users, but it’s absolutely fantastic for security. Similarly, since Signal relies entirely on phone numbers in your contacts database (a point that, admittedly, many users hate), it never has to back up your contact lists to a server.

What’s changed recently is that Signal has begun to attract a larger user base. As users with traditional expectations enter the picture, they’ve been unhappy with Signal’s limitations. In response, the Signal developers have begun to explore ways by which they can offer these features without compromising security. This is just plain challenging, and I feel for the developers.

One area in which they’ve tried to square the circle is with their new solution for contacts backup: a system called “secure value recovery.”

What’s Secure Value Recovery?

Signal’s Secure Value Recovery (SVR) is a cloud-based system that allows users to store encrypted data on Signal’s servers — such that even Signal cannot access it — without the usability headaches that come from traditional encryption key management. At the moment, SVR is being used to store users’ contact lists and not message content, although that data may be on the menu for backup in the future.

The challenge in storing encrypted backup data is that strong encryption requires strong (or “high entropy”) cryptographic keys and passwords. Since most of us are terrible at selecting, let alone remembering, strong passwords, this poses a challenging problem. Moreover, these keys can’t just be stored on your device, since the whole point of backup is to deal with lost devices.

The goal of SVR is to allow users to protect their data with much weaker passwords that humans can actually memorize, such as a 4-digit PIN. With traditional password-based encryption, such passwords would be completely insecure: a motivated attacker who obtained your encrypted data from the Signal servers could simply run a dictionary attack, trying all 10,000 such passwords in a few seconds or minutes, and thus obtaining your data.
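To make the dictionary attack concrete, here is a minimal sketch, assuming a toy scheme in which the backup key is derived from the PIN alone with PBKDF2 and the stolen backup contains something (here an HMAC over a fixed header, standing in for an authentication tag) that lets the attacker check each guess. The names `derive_key`, `check_tag` and `dictionary_attack` are hypothetical, and this is not how Signal stores anything.

```python
import hashlib
import hmac
import os

# Toy "traditional" password-based encryption: the backup key depends on the PIN alone.
# The iteration count is illustrative; real systems use far more, which only
# multiplies the attacker's work by a constant over a 10,000-entry space.
ITERATIONS = 100


def derive_key(pin: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", pin.encode(), salt, ITERATIONS)


def check_tag(key: bytes) -> bytes:
    # Stand-in for anything in the stolen backup that lets a guess be verified,
    # e.g. an authenticated-encryption tag. Here: an HMAC over a fixed header.
    return hmac.new(key, b"backup-header", hashlib.sha256).digest()


def dictionary_attack(salt: bytes, stolen_tag: bytes):
    """Try every 4-digit PIN offline; nothing limits the number of guesses."""
    for n in range(10_000):
        guess = f"{n:04d}"
        if hmac.compare_digest(check_tag(derive_key(guess, salt)), stolen_tag):
            return guess
    return None


salt = os.urandom(16)
stolen_tag = check_tag(derive_key("9512", salt))  # what sits on the server
print(dictionary_attack(salt, stolen_tag))  # 9512
```

The attacker never talks to Signal: once the salt and tag are copied off the server, the whole PIN space is enumerated locally.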

Signal’s SVR solves this problem in an age-old way: it introduces a computer that even Signal can’t hack. More specifically, Signal makes use of a new extension to Intel processors called Software Guard eXtensions, or SGX. SGX allows users to write programs, called “enclaves”, that run in a special virtualized processor mode. In this mode, enclaves are invisible to and untouchable by all other software on a computer, including the operating system. If storage is needed, enclaves can persistently store (or “seal”) data, such that any attempt to tamper with the program will render that data inaccessible. (Update: as a note, Signal’s SVR does not seal data persistently. I included this in the draft thinking that they did, but I misremembered this from the technology preview.)

Signal’s SVR deploys such an enclave program on the Signal servers. This program performs a simple function: for each user, it generates and stores a random 256-bit cryptographic secret “seed” along with a hash of the user’s PIN. When a user’s client contacts the server, it can present a hash of the PIN to the enclave and ask for the cryptographic seed. If the hash matches what the enclave has stored, the server delivers the secret seed to the client, which can mix it together with the PIN. The result is a cryptographically strong encryption key that can be used to encrypt or decrypt backup data. (Update: thanks to Dino Dai Zovi for correcting some details in here.)
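The flow in the paragraph above can be modeled in a few lines of plain Python. This is a sketch of the logic as described in this post, not SGX and not Signal’s code: `ToyEnclave`, `hash_pin` and `backup_key` are hypothetical names, the PIN hash is an unsalted SHA-256 to keep it short, and HMAC-SHA256 is an assumed way of mixing the seed with the PIN.

```python
import hashlib
import hmac
import secrets
from typing import Optional


def hash_pin(pin: str) -> bytes:
    # Unsalted SHA-256 keeps the toy short; a real design would use a salted, slow hash.
    return hashlib.sha256(pin.encode()).digest()


class ToyEnclave:
    """Plain-Python model of the enclave logic described above. Not SGX, not Signal's code."""

    def __init__(self) -> None:
        self._records = {}  # user -> (seed, pin_hash)

    def register(self, user: str, pin_hash: bytes) -> None:
        seed = secrets.token_bytes(32)  # random 256-bit seed chosen inside the enclave
        self._records[user] = (seed, pin_hash)

    def request_seed(self, user: str, pin_hash: bytes) -> Optional[bytes]:
        seed, stored_hash = self._records[user]
        if hmac.compare_digest(stored_hash, pin_hash):
            return seed  # released only when the presented PIN hash matches
        return None


def backup_key(seed: bytes, pin: str) -> bytes:
    # Client mixes the seed with the PIN. HMAC-SHA256 is an assumed KDF choice.
    return hmac.new(seed, pin.encode(), hashlib.sha256).digest()


enclave = ToyEnclave()
enclave.register("alice", hash_pin("9512"))
seed = enclave.request_seed("alice", hash_pin("9512"))
key = backup_key(seed, "9512")
print(len(key))  # 32
print(enclave.request_seed("alice", hash_pin("0000")))  # None
```

The resulting key has the full 256 bits of the seed behind it, even though the user only remembers four digits.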

The key to this approach is that the encryption key now depends on both the user’s password and a strong cryptographic secret stored by an SGX enclave on the server. If SGX does its job, then even an attacker who hacks into the Signal servers (and here we include the Signal developers themselves, perhaps operating under duress) will be unable to retrieve this user’s secret value. The only way to access the backup encryption key is to actually run the enclave program and enter the user’s hashed PIN. To prevent brute-force guessing, the enclave keeps track of the number of incorrect PIN-entry attempts, and will only allow a limited number before it locks that user’s account entirely.
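A guess limit turns the offline attack into an online one that ends quickly. The sketch below assumes a limit of 10 wrong attempts and that lockout means the seed is discarded; both are illustrative choices, since the post does not give Signal’s actual parameters, and `GuessLimitedEnclave` is a hypothetical name.

```python
import hashlib
import hmac
import secrets
from typing import Optional

MAX_FAILURES = 10  # hypothetical; the post does not give Signal's actual limit


class GuessLimitedEnclave:
    """Toy model: count wrong PIN hashes and destroy the seed once the limit is hit."""

    def __init__(self, pin_hash: bytes) -> None:
        self._pin_hash = pin_hash
        self._seed: Optional[bytes] = secrets.token_bytes(32)
        self._failures = 0

    def request_seed(self, pin_hash: bytes) -> Optional[bytes]:
        if self._seed is None:
            return None  # locked: nothing left to release
        if hmac.compare_digest(self._pin_hash, pin_hash):
            return self._seed
        self._failures += 1
        if self._failures >= MAX_FAILURES:
            self._seed = None  # lock the account for good
        return None


def h(pin: str) -> bytes:
    return hashlib.sha256(pin.encode()).digest()


enclave = GuessLimitedEnclave(h("9512"))
recovered = None
for n in range(10_000):  # online brute force through the enclave's interface
    if enclave.request_seed(h(f"{n:04d}")) is not None:
        recovered = f"{n:04d}"
        break
print(recovered)  # None: locked out after 10 wrong guesses
print(enclave.request_seed(h("9512")))  # None, even with the right PIN now
```

With 10 guesses out of 10,000 PINs, a uniformly random PIN survives with probability 99.9%; the protection rests entirely on the counter being enforced.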

This is an elegant approach, and it’s conceptually quite similar to systems already deployed by Apple and Google, who use dedicated Hardware Security Modules to implement the trusted component, rather than SGX.

The key weakness of the SVR approach is that it depends strongly on the security and integrity of SGX execution. As we’ll discuss in just a moment, SGX does not exactly have a spotless record.

What happens if SGX isn’t secure?

Anytime you encounter a system that relies fundamentally on the trustworthiness of some component — particularly a component that exists in commodity hardware — your first question should be: “what happens if that component isn’t actually trustworthy?”

With SVR, that question is especially relevant.

Let’s step back. Recall that the goal of SVR is to ensure three things:

- The backup encryption key is based, at least in part, on the user’s chosen password. Strong passwords mean strong encryption keys.

- Even with a weak password, the encryption key will still have cryptographic strength. This comes from the integration of a random seed that gets chosen and stored by SGX.

- No attacker will be able to brute-force their way through the password space. This is enforced by SGX via guessing limits.

Note that only the first goal is really enforced by cryptography itself. And this goal will only be achieved if the user selects a strong (high-entropy) password. For an example of what that looks like, see the picture at right.
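To put rough numbers on “strong” versus “weak”, the usual measure is entropy in bits, $H = \log_2 N$ for a secret drawn uniformly from $N$ possibilities. The figures below assume each secret is chosen uniformly at random (human-chosen PINs do worse), include the thirty-digit Android backup key for comparison, and take a randomly generated 12-word BIP39 mnemonic, whose words come from a 2048-word list and whose last 4 bits are a checksum.

```latex
\begin{aligned}
H_{\text{4-digit PIN}}   &= \log_2 10^{4} = 4\log_2 10 \approx 13.3\ \text{bits} \\
H_{\text{30-digit key}}  &= \log_2 10^{30} = 30\log_2 10 \approx 99.7\ \text{bits} \\
H_{\text{12-word BIP39}} &= 12\log_2 2048 - 4 = 132 - 4 = 128\ \text{bits}
\end{aligned}
```

Each extra bit doubles an offline attacker’s work, so the gap between 13.3 and 128 bits is a factor of roughly $2^{115}$.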

The remaining goals rely entirely on the integrity of SGX. So let’s play devil’s advocate, and think about what happens to SVR if SGX is not secure.

If an attacker is able to dump the memory space of a running Signal SGX enclave, they’ll be able to expose secret seed values as well as user password hashes. With those values in hand, attackers can run a basic offline dictionary attack to recover the user’s backup keys and passphrase. The difficulty of completing this attack depends entirely on the strength of a user’s password. If it’s a BIP39 phrase, you’ll be fine. If it’s a 4-digit PIN, as strongly encouraged by the UI of the Signal app, you will not be.
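Here is a sketch of why a memory dump is so damaging, assuming (as in the toy model of the enclave) an unsalted SHA-256 PIN hash and an HMAC-based mix of seed and PIN; `offline_attack` is a hypothetical name. Once the seed and PIN hash are outside the enclave, no retry counter is consulted, and the attacker simply enumerates the PIN space.

```python
import hashlib
import hmac
import secrets


def hash_pin(pin: str) -> bytes:
    # Assumed unsalted SHA-256, for illustration only.
    return hashlib.sha256(pin.encode()).digest()


def backup_key(seed: bytes, pin: str) -> bytes:
    # Assumed seed/PIN mixing step (HMAC-SHA256).
    return hmac.new(seed, pin.encode(), hashlib.sha256).digest()


def offline_attack(leaked_seed: bytes, leaked_pin_hash: bytes):
    """Given a memory dump of (seed, pin_hash), enumerate the 4-digit PIN space."""
    for n in range(10_000):
        guess = f"{n:04d}"
        if hmac.compare_digest(hash_pin(guess), leaked_pin_hash):
            # No enclave is involved any more, so no guess limit applies.
            return guess, backup_key(leaked_seed, guess)
    return None


# What the attacker pulled out of enclave memory (simulated):
seed = secrets.token_bytes(32)
dumped = (seed, hash_pin("9512"))
pin, key = offline_attack(*dumped)
print(pin)  # 9512
```

A salted, deliberately slow hash would raise the cost per guess, but with only 10,000 candidates it cannot make the search infeasible; only a high-entropy passphrase does that.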

(The sensitivity of this data becomes even worse if your PIN happens to be the same as your phone passcode. Make sure it’s not!)

Similarly, if an attacker is able to compromise the integrity of SGX execution: for example, to cause the enclave to run using stale “state” rather than new data, then they might be able to defeat the limits on the number of incorrect password (“retry”) attempts. This would allow the attacker to run an active guessing attack on the enclave until they recover your PIN. (Edit: As noted above, this shouldn’t be relevant in SVR because data is stored only in RAM, and never sealed or written to disk.)
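For readers unfamiliar with rollback attacks, here is a minimal sketch of the idea, assuming a hypothetical enclave whose retry counter lives in a state blob the host stores and reloads (the sealed-storage scenario the edit above says does not apply to SVR). In real SGX the blob would be encrypted; the model keeps it as a plain dict, but the host never reads or edits it, it only replays an older copy, which sealing by itself does not prevent.

```python
import copy
import hashlib
import hmac
import secrets

MAX_FAILURES = 10  # hypothetical retry limit


class CountingEnclave:
    """Toy enclave whose retry counter lives in a state blob the host can store and reload."""

    def __init__(self, pin_hash: bytes) -> None:
        self.state = {"pin_hash": pin_hash, "seed": secrets.token_bytes(32), "failures": 0}

    def request_seed(self, pin_hash: bytes):
        s = self.state
        if s["failures"] >= MAX_FAILURES:
            return None
        if hmac.compare_digest(s["pin_hash"], pin_hash):
            return s["seed"]
        s["failures"] += 1
        return None


def h(pin: str) -> bytes:
    return hashlib.sha256(pin.encode()).digest()


enclave = CountingEnclave(h("9512"))
snapshot = copy.deepcopy(enclave.state)  # stale state saved by a malicious host

found = None
for n in range(10_000):
    if enclave.state["failures"] == MAX_FAILURES - 1:
        enclave.state = copy.deepcopy(snapshot)  # rollback: counter back to 0
    guess = f"{n:04d}"
    if enclave.request_seed(h(guess)) is not None:
        found = guess
        break
print(found)  # 9512
```

The counter never reaches the limit, so the “online” attack quietly becomes an unlimited one.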

A final, and more subtle concern comes from the fact that Signal’s SVR also allows for “replication” of the backup database. This addresses a real concern on Signal’s part that the backup server could fail — resulting in the loss of all user backup data. This would be a UX nightmare, and understandably, Signal does not want users to be exposed to it.

To deal with this, Signal’s operators can spin up a new instance of the Signal server on a cloud provider. The new instance will have a second copy of the SGX enclave software, and this software can request a copy of the full seed database from the original enclave. (There’s even a sophisticated consensus protocol to make sure the two copies stay in agreement about the state of the retry counters, once this copy is made.)

The important thing to keep in mind is that the security of this replication process depends entirely on the idea that the original enclave will only hand over its data to another instance of the same enclave software running on a secure SGX-enabled processor. If it was possible to trick the original enclave about the status of the new enclave — for example, to convince it to hand the database over to a system that was merely emulating an SGX enclave in normal execution mode — then a compromised Signal operator would be able to use this mechanism to exfiltrate a plaintext copy of the database. This would break the system entirely.

Prevention against this attack is accomplished via another feature of Intel SGX, which is called “remote attestation”. Essentially, each Intel processor contains a unique digital signing key that allows it to attest to the fact that it’s a legitimate Intel processor, and it’s running a specific piece of enclave software. These signatures can be verified with the assistance of Intel, which allows enclaves to verify that they’re talking directly to another legitimate enclave.

The power of this system also contains its fragility: if a single SGX attestation key were to be extracted from a single SGX-enabled processor, this would provide a backdoor for any entity who is able to compromise the Signal developers.
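The replication check and its single point of failure can be illustrated with a toy model. Everything here is a loud simplification: real SGX quotes are asymmetric signatures verified through Intel’s attestation infrastructure, whereas this sketch uses per-CPU HMAC keys registered with a stand-in verifier, and `ToyIntelVerifier`, `make_quote`, `replicate` and the measurement value are all hypothetical. What it preserves is the logic: a quote proves only that some legitimate processor key vouched for a given enclave measurement.

```python
import hashlib
import hmac
import secrets

# Hypothetical measurement (hash) of the genuine SVR enclave build.
ENCLAVE_MEASUREMENT = hashlib.sha256(b"svr-enclave-build").digest()


class ToyIntelVerifier:
    """Stand-in for Intel's attestation service. Real SGX quotes use asymmetric
    signatures; HMAC with registered per-CPU keys is a simplification."""

    def __init__(self) -> None:
        self._cpu_keys = {}

    def provision_cpu(self, cpu_id: str) -> bytes:
        key = secrets.token_bytes(32)  # models the key fused into the processor
        self._cpu_keys[cpu_id] = key
        return key

    def verify(self, cpu_id: str, measurement: bytes, quote: bytes) -> bool:
        key = self._cpu_keys.get(cpu_id)
        if key is None:
            return False
        expected = hmac.new(key, measurement, hashlib.sha256).digest()
        return hmac.compare_digest(expected, quote)


def make_quote(cpu_key: bytes, measurement: bytes) -> bytes:
    return hmac.new(cpu_key, measurement, hashlib.sha256).digest()


def replicate(database, verifier, cpu_id, measurement, quote):
    """Original enclave: hand over the seed database only to an attested peer."""
    if measurement != ENCLAVE_MEASUREMENT:
        return None
    if not verifier.verify(cpu_id, measurement, quote):
        return None
    return dict(database)


verifier = ToyIntelVerifier()
db = {"alice": secrets.token_bytes(32)}
cpu_key = verifier.provision_cpu("cpu-A")

# Honest replica: genuine enclave on a genuine CPU.
ok = replicate(db, verifier, "cpu-A", ENCLAVE_MEASUREMENT, make_quote(cpu_key, ENCLAVE_MEASUREMENT))
# Emulator with no attestation key: rejected.
fake = replicate(db, verifier, "cpu-A", ENCLAVE_MEASUREMENT, secrets.token_bytes(32))
# Emulator holding one extracted CPU key: it can claim the genuine measurement.
leaked = replicate(db, verifier, "cpu-A", ENCLAVE_MEASUREMENT, make_quote(cpu_key, ENCLAVE_MEASUREMENT))
print(ok is not None, fake is None, leaked == db)  # True True True
```

Nothing in the check distinguishes the honest replica from an emulator that holds a stolen key; the operator still has to trigger replication, which is why the attack also requires compromising a Signal admin.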

With these concerns in mind, it’s worth asking how realistic it is that SGX will meet the high security bar it needs to make this system work.

So how has SGX done so far?

Not well, to be honest. A list of SGX compromises is given on Wikipedia here, but this doesn’t really tell the whole story.

The various attacks against SGX are many and varied, but largely have a common cause: SGX is designed to provide virtualized execution of programs on a complex general-purpose processor, and said processors have a lot of weird and unexplored behavior. If an attacker can get the processor to misbehave, this will in turn undermine the security of SGX.

This leads to attacks such as “Plundervolt”, where malicious software is able to tamper with the voltage level of the processor in real time, causing faults that leak critical data. The list also includes attacks that leverage glitches in the way enclaves are loaded, which can allow an attacker to inject malicious code in place of a proper enclave.

The scariest attacks against SGX rely on “speculative execution” side channels, which can allow an attacker to extract secrets from SGX, up to and including basically all of the working memory used by an enclave. This could allow extraction of values like the seed keys used by Signal’s SVR, or the sealing keys (used to encrypt that data on disk). Worse, these attacks have not once but twice been successful at extracting the signing keys used to perform remote attestation. The most recent one was patched just a few weeks ago. These are very much live attacks, and you can bet that more will be forthcoming.

This last part is bad for SVR, because if an attacker can extract even a single copy of one processor’s attestation signing keys, and can compromise a Signal admin’s secrets, they can potentially force Signal to replicate their database onto a simulated SGX enclave that isn’t actually running inside SGX. Once SVR replicated its database to the system, everyone’s secret seed data would be available in plaintext.

But what really scares me is that these attacks I’ve listed above are simply the result of academic exploration of the system. At any given point in the past two years I’ve been able to have a beer with someone like Daniel Genkin of U. Mich or Daniel Gruss of TU Graz, and know that either of these professors (or their teams) is sitting on at least one catastrophic unpatched vulnerability in SGX. These are very smart people. But they are not the only smart people in the world. And there are smart people with more resources out there who would very much like access to backed-up Signal data.

It’s worth pointing out that most of the above attacks are software-only attacks — that is, they assume an attacker who is only able to get logical access to a server. The attacks are so restricted because SGX is not really designed to defend against sophisticated physical attackers, who might attempt to tap the system bus or make direct attempts to unpackage and attach probes to the processor itself. While these attacks are costly and challenging, there are certainly agencies that would have no difficulty executing them.

Finally, I should also mention that the security of the SVR approach assumes Intel is honest. Which, frankly, is probably an assumption we’re already making. So let’s punt on it.

So what’s the big deal?

My major issue with SVR is that it’s something I basically don’t want, and don’t trust. I’m happy with Signal offering it as an option to users, as long as users are allowed to choose not to use it. Unfortunately, up until this week, Signal was not giving users that choice.

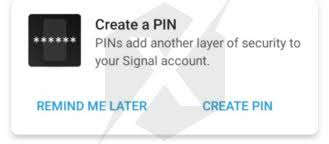

More concretely: a few weeks ago Signal began nagging users to create a PIN code. The app itself didn’t really explain well that setting this PIN would start SVR backups. Many people I spoke to said they believed that the PIN was for protecting local storage, or to protect their account from hijacking.

And Signal didn’t just ask for this PIN. It followed a common “dark pattern” born in Silicon Valley of basically forcing users to add the PIN, first by nagging them repeatedly and then ultimately by blocking access to the entire app with a giant modal dialog.

This is bad behavior on its merits, and more critically: it probably doesn’t result in good PIN choices. To make it go away, I chose the simplest PIN that the app would allow me to, which was 9512. I assume many other users simply entered their phone passcodes, which is a nasty security risk all on its own.

Some will say that this is no big deal, since SVR currently protects only users’ contact lists — and those are already stored in cleartext on competing messaging systems. This is, in fact, one of the arguments Moxie has made.

But I don’t buy this. Nobody is going to engineer something as complex as Signal’s SVR just to store contact lists. Once you have a hammer like SVR, you’re going to want to use it to knock down other nails. You’ll find other critical data that users are tired of losing, and you’ll apply SVR to back that data up. Since message content backups are one of the bigger pain points in Signal’s user experience, sooner or later you’ll want to apply SVR to solving that problem too.

In the past, my view was that this would be fine — since Signal would surely give users the ability to opt into or out of message backups. The recent decisions by Signal have shaken my confidence.

Addendum: what does Signal say about this?

Originally this post had a section that summarized a discussion I had with Moxie around this issue. Out of respect for Moxie, I’ve removed some of this at his request because I think it’s more fair to let Moxie address the issue directly without being filtered through me.

So in this rewritten section I simply want to make the point that now (following some discussion on Twitter) there is a workaround to this issue. You can either set a high-entropy passcode such as a BIP39 phrase and then forget it (this will not screw up your account unless you turn on the “registration lock” feature), or you can use the new “Disable PIN” advanced feature in Signal’s latest beta, which does essentially the same thing in an automated way. This seems like a good addition, and while I still think there’s a discussion to be had around consent and opt-in, this is a start for now.

Great post. Thank you. I am in tech and I didn’t realize this is why Signal was asking for a pin.

I do appreciate Signal trying to be a bit more user-friendly, but the main reason I like Signal is because it isn’t like all the other messaging apps. It will be very interesting to see where they go in the future. I hope they take your suggestions to heart.

“limits on the number of incorrect password (“retry”) attempts” – anyone willing to bet this is configurable? And even if it isn’t, an authorized change to the code, made at the request or order of a government, could turn it off.

The use of pin that you’ve described surprised me.

FWIW I was just looking at the settings and noticed it explains “Your profile, settings and contacts will restore when you reinstall Signal” so it seems it’s not limited to just contacts?

spelling “Marlinkspike”

sense? “I asked Moxie why Signal could just do this work for me, and he said that they could.”

Is there any particular reason that you are using AMP links?

https://www.google.com/amp/s/www.vice.com/amp/en_us/article/pkyzek/signal-new-pin-feature-worries-cybersecurity-experts

I’ve been concerned about Signal’s (the company) integrity for some time. *Quietly* dropping support for encrypted SMS, making use of their centralized servers mandatory, accessing the phone’s contacts at all, hostility towards independent builds (fdroid), and now this. And most of these (I think they accessed the contacts from the beginning, but am not sure) started cropping up *after* Snowden. Now, it may be that they are all in response to increased user numbers, but I am distinctly uncomfortable… If I wanted to subvert the “ecosystem”, I would have taken many of these steps as well…

Thank you for such an elaborate critique.

Signal continues to be a masterpiece and a literal life saver for people in my country (an American-backed, body-sawing, famine-causing absolute monarchy, if you want to guess). At the same time, every day, we are entrusting the centralized team behind Signal with ever-increasing decisions, which, to me, is the most major exploit in the Signal design. I have heavily used the quote you gave (“I literally discovered a line of drool running down my face.”) to promote the app and I am grateful to see you taking such a firm stance.

I want to point out an error in the role of the SGX enclave. The enclave isn’t the one generating the 256-bit key. Instead the user client generates the 256-bit key (called masterkey in the code) and uses the user’s choice of PIN to store an encrypted copy of this key in the SGX enclave. The user data is stored using keys derived from the masterkey, and the user PIN plays no role in this. This is from my reading of the Android app’s code and differs slightly from the blog post. The advantage of this design is that a change in the user PIN doesn’t necessitate re-encryption of the data already uploaded; only the encrypted copy of the masterkey in the enclave needs to be updated.
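The design described in this comment can be sketched as follows, with heavy caveats: the KDF labels, the PBKDF2 parameters and the XOR “wrap” are all hypothetical stand-ins (a real implementation would use an authenticated key-wrapping scheme), and none of these names come from Signal’s code. The point it illustrates is that data keys derive from a random master key, so changing the PIN only re-wraps the master key.

```python
import hashlib
import hmac
import secrets


def kdf(key: bytes, label: bytes) -> bytes:
    # Hypothetical HMAC-SHA256 derivation, not the KDF used in Signal's code.
    return hmac.new(key, label, hashlib.sha256).digest()


def pin_pad(pin: str, salt: bytes) -> bytes:
    # 32-byte PIN-derived value used to wrap the master key (illustrative parameters).
    return hashlib.pbkdf2_hmac("sha256", pin.encode(), salt, 10_000)


def xor(a: bytes, b: bytes) -> bytes:
    # XOR with a PIN-derived pad stands in for real key wrapping; illustration only.
    return bytes(x ^ y for x, y in zip(a, b))


# Client: the master key is random and does not depend on the PIN.
master_key = secrets.token_bytes(32)
storage_key = kdf(master_key, b"storage")  # this is what encrypts uploaded data

# The enclave only ever holds a PIN-wrapped copy of the master key.
salt = secrets.token_bytes(16)
wrapped = xor(master_key, pin_pad("9512", salt))

# PIN change: unwrap with the old PIN, re-wrap under the new one.
unwrapped = xor(wrapped, pin_pad("9512", salt))
new_salt = secrets.token_bytes(16)
rewrapped = xor(unwrapped, pin_pad("correct horse battery", new_salt))

# The storage key is unchanged, so uploaded data needs no re-encryption.
print(kdf(unwrapped, b"storage") == storage_key)  # True
```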

In the “So what’s the big deal?” section, you mention that you don’t trust SVR. Does the fact that you can use as strong a password as you want, removing SGX from the picture (Moxie’s workaround), change your opinion on this? If it doesn’t, please do elaborate. As you mentioned, the opt-out they are building still uploads contacts.

Please do ask Moxie why encryption keys aren’t backed up now. If he thinks that the design is secure enough for contact lists, why isn’t it secure enough for encryption keys? Being able to restore them would mean that safety number changes become a lot less frequent, increasing security for everyone.

Why can message backup be opt-in but contact backup has to be mandatory? Maybe Moxie can blame the cost for transporting and storing all the data. Even the user is hit with costs if there are no incremental backups. Is this the only difficulty?

As you point out, SVR is looking like a very dangerous slippery slope and users don’t have much control over the decisions and it isn’t easy to switch because of a centralized server. The only saving grace is that the client can be modified so that none of your data is uploaded.

Backing up encryption keys would possibly be the worst decision ever made. The point of E2E crypto is to keep keys on the device and never store them elsewhere. Changing safety numbers is by design and it’s important to be notified of such. Being able to backup keys would be terrible from a security standpoint, there would really be no reason to and it could only harm people. It could allow someone to impersonate you or read all future messages if they got a hold of your pin.

You also missed another dark pattern. Those who had opted for Registration Lock (for which a 4 digit PIN was good enough due to rate limitations placed on phone number verification) were silently upgraded to this new system and I don’t think they were even informed. Probably only those who were following the blog posts and community beta feedback threads knew what would happen.

Taking consent for one thing and repurposing it into something entirely different is unexpected behavior.

That’s right. I only wanted Registration Lock, and when creating the PIN I don’t remember seeing any warning that my contacts would be stored on the server. Now it looks like I’ll have to give up on Registration Lock if I don’t want my contacts on the server!

Agreed – and we cannot opt out of contact backup if we want to retain our Registration Lock, even in the most recent beta version. To turn off contact backup, we have to disable the PIN, which disables Registration Lock. 😠

Look at the latest replies by Greyson in the beta feedback thread on Android v4.63. There is no option to disable contact backup unless you modify the source code.

It’s not letting me reply directly to “concern signal user”’s comment: “Look at the latest replies by Greyson in the beta feedback thread on Android v4.63. There is no option to disable contact backup unless you modify the source code.”

This is simply wrong and misinformation.

Krepptil, I made a typo. I should’ve said v4.66. Look at the link posted by Oscar and scroll down. To quote Greyson,

“We still create a master key locally and derive a storage key from the master key. That storage key is still used to encrypt data that is uploaded to storage service. Storage service is still necessary for future features involving linked devices.”

Thanks for your great post.

About the SVR replicas: it should be noted that Signal stores the user PIN or passphrase in plaintext inside its encrypted SQL database. This is not a bug, but the intended behavior of the latest Signal for Android (at the time of writing this). Check out the forum for details: https://community.signalusers.org/t/beta-feedback-for-the-upcoming-android-4-66-release/15579/28

We suggested that they stop doing it, as it’s bad practice to store passwords in the clear, even more so when the app only stores the value and never reads it back. Maybe for PIN reminders? Certainly not, because the app stores and compares the hash of the PIN for reminders instead.

How is this related to the vulnerability of replication in the SVR database? Given that the app has access to the user PIN, it can encrypt a new MasterKey and re-upload everything in case of server failure. And it could do it WITHOUT any user interaction. Moxie’s reason of “bad UX” for not getting rid of replicas doesn’t come into play anymore.

Why take the risk of storing the PIN in plaintext? I don’t know. But let’s assume it’s a hidden fail-safe mechanism for SVR. Then why the replicas and the vulnerabilities that go with them? We have the worst of both approaches now.

I am and have been a Signal user for a very long time. I love it. I trust Marlinspike having met him and taken a course he taught in the past.

I am, however, extremely disappointed in how this was communicated. At a minimum, my ask is that Signal make a formal statement that they will never, in any circumstance, take data without first informing the user base that they will be doing so. I cannot help but feel this was done on purpose, as they could predict the outcry. What is done is done, but I would like their commitment that it will never happen again.

-mike

“These signatures can be verified with the assistance of Intel, which allows enclaves to verify that they’re talking directly to another legitimate enclave. The power of this system also contains its fragility: if a single SGX attestation key were to be extracted from a single SGX-enabled processor, this would provide a backdoor for any entity who is able to compromise the Signal developers.”

I don’t think this is any different from any other cloud-level / HSM solution. Once you have a compromised version of the deployed HSM, AND you have compromised a Signal developer (i.e. admin who can deploy new HSMs) then the system is broken.

Thanks to having a programmable TEE here you can actually do something more fun. I think you could use local sealing to bind the critical keys to the HW, and the enclave can implement any kind of provisioning/authorization scheme to enable migration and backup, including secret sharing across multiple Signal admins, other TEEs or HW tokens.

However, even if they were to use proper HSMs and fancy authorization schemes, I don’t think there is any product available that can withstand physical attacks beyond a year of PhD time.

I’m surprised, though, that you don’t point out the more fundamental problem here, which is trying to build a long-term secure backup solution against a nation-state adversary using (due to bad UX) an insecure PIN and a mix of asymmetric authentication/attestation schemes. This is such a bad design that I feel like I’m the one overlooking something obvious.

The whole user story also does not seem to work well with GDPR. Storing backups without telling me, with a PIN that I have set in a different context without having been properly informed about its usage? Someone has been socially distanced for too long.

Thank you so much for taking the time to write this all down. I’d consider myself a “technically versatile user”, using Signal since the TextSecure days, joined the Android Beta program years ago, and I knew that Signal was planning to use the SGX enclave, but I’m surprised that the PIN was somehow part of all this.

Thanks again for explaining all this. I only hope that the Signal devs reconsider some UI choices of the recent past to address all this.

While cloud backups seem to be theoretically possible on Android, the support site basically describes a way to create local backups that can be manually transferred. There apparently is currently no convenient way to back things up to the cloud like there is on services like WhatsApp. This also makes transitions to a new device much more inconvenient, and is a reason why I stopped using Signal for now on my new smartphone. Maybe that can be corrected in the article above.

I’ve been a Signal user since the days when it was still TextSecure and RedPhone, and ever since I’ve been a beta tester and occasional bug reporter. I guessed it had something to do with some unwanted uploads but couldn’t really tell. Also, the crap started nagging more and more, and I had to figure out what to do with my family members being puzzled… And finally I got forced into something I explicitly don’t want!

Also when the SGX flaw was discovered I had expected that signal is looking for alternatives to their address book comparison thingie, but hey let’s use SGX for even more critical stuff…

I always defended Moxie and felt he wanted what was good for us. But he (or the foundation, or whoever) has become some sort of dictatorship. Recently I placed a feature request in the forum; a nice discussion started, and in the course of it someone mentioned that there was a similar request somewhere else (in my opinion under the wrong category), and immediately a mod came, locked and hid the thread.

There is only one opinion allowed. Without a better option I will stay with Signal but no more with the backing from the bottom of my heart…

Thanks Matthew for talking about such things.

Get on Birdie free as a bird and one shot deal.

Download Pix-Art or Conversations from F-Droid on Android.

Enjoy the Freedom!

–

DarkiJah

Pass the Birdie and spread the Word 😉

Is there a fully functional alternative implementation/fork of signal server?